Holographic imaging has long struggled to capture clear, accurate images in dynamic environments, and conventional deep learning methods adapt poorly to the varied conditions of real-world scenes. Researchers at Zhejiang University have made progress on this problem by working at the intersection of optics and deep learning. They found that physical priors play a crucial role in aligning data with pre-trained models, and they used that insight to develop a method called TWC-Swin. The method addresses the image degradation caused by low spatial coherence and turbulence, producing high-quality holographic images.

The Importance of Spatial Coherence

Spatial coherence plays a fundamental role in holographic imaging. It describes how well the phases of a light wave are correlated at different points across the beam. Highly coherent light produces clear, detailed holographic images; as coherence drops, the images carry less information and become blurry and noisy. Maintaining spatial coherence is therefore crucial for accurate holographic imaging.
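To make the link between coherence and image contrast concrete, here is a minimal sketch using the textbook two-beam interference model. It is an illustration only and is not taken from the TWC-Swin work: the function names, the parameter `gamma` (the magnitude of the degree of spatial coherence between two points), and the sampling grid are all assumptions chosen for the example. For two beams of equal intensity, the fringe visibility equals `gamma`, so lower coherence means flatter, lower-contrast fringes.

```python
import numpy as np

def fringe_pattern(x, gamma, period=1.0, i0=1.0):
    """Two-beam interference intensity for equal-intensity beams.

    gamma is the magnitude of the degree of spatial coherence between
    the two interfering points (0 = incoherent, 1 = fully coherent).
    All names and defaults here are illustrative assumptions.
    """
    return 2.0 * i0 * (1.0 + gamma * np.cos(2.0 * np.pi * x / period))

def visibility(intensity):
    """Fringe visibility V = (I_max - I_min) / (I_max + I_min)."""
    i_max, i_min = intensity.max(), intensity.min()
    return (i_max - i_min) / (i_max + i_min)

# Four fringe periods, sampled finely enough to hit maxima and minima.
x = np.linspace(0.0, 4.0, 4001)
for gamma in (1.0, 0.5, 0.1):
    v = visibility(fringe_pattern(x, gamma))
    print(f"gamma = {gamma:.1f} -> visibility = {v:.3f}")
```

In this model the printed visibility tracks `gamma`, which is one simple way to see why a hologram recorded with poorly coherent light loses contrast and detail.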

Dynamic environments, such as those with oceanic or atmospheric turbulence, introduce variations in the refractive index of the medium. These variations disrupt the phase correlation of the light waves and degrade spatial coherence. As a result, holographic images may become blurred, distorted, or lost entirely. The researchers at Zhejiang University set out to address these challenges.
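The effect of refractive-index fluctuations can be sketched with a toy random phase screen. The example below is an assumption-laden illustration, not the researchers' setup: it uses Gaussian-smoothed white noise rather than a physical turbulence spectrum, and the names `random_phase_screen`, `far_field_peak_ratio`, `strength`, and `corr_px` are hypothetical. It applies the phase screen to a uniform plane wave and compares the peak far-field intensity with the undistorted case, a Strehl-like ratio that falls as the phase distortion grows.

```python
import numpy as np

def random_phase_screen(n, strength, corr_px, rng):
    """Smooth random phase map (radians) with standard deviation `strength`.

    White noise is low-pass filtered with a Gaussian in the Fourier domain.
    This is a toy model, not a Kolmogorov turbulence spectrum.
    """
    noise = rng.standard_normal((n, n))
    fx = np.fft.fftfreq(n)
    fxx, fyy = np.meshgrid(fx, fx)
    lowpass = np.exp(-2.0 * (np.pi * corr_px) ** 2 * (fxx**2 + fyy**2))
    smooth = np.fft.ifft2(np.fft.fft2(noise) * lowpass).real
    return strength * smooth / smooth.std()

def far_field_peak_ratio(phase):
    """Peak far-field intensity relative to an undistorted plane wave."""
    aperture = np.ones_like(phase)
    ideal = np.abs(np.fft.fft2(aperture)) ** 2
    distorted = np.abs(np.fft.fft2(aperture * np.exp(1j * phase))) ** 2
    return distorted.max() / ideal.max()

rng = np.random.default_rng(0)
for strength in (0.0, 0.5, 2.0):
    screen = random_phase_screen(128, strength, corr_px=8.0, rng=rng)
    ratio = far_field_peak_ratio(screen)
    print(f"phase std = {strength:.1f} rad -> peak ratio = {ratio:.3f}")
```

A ratio of 1 corresponds to an undisturbed wavefront; stronger phase fluctuations spread the energy away from the peak, which is the kind of degradation the article describes as blurring and distortion.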

The TWC-Swin Method

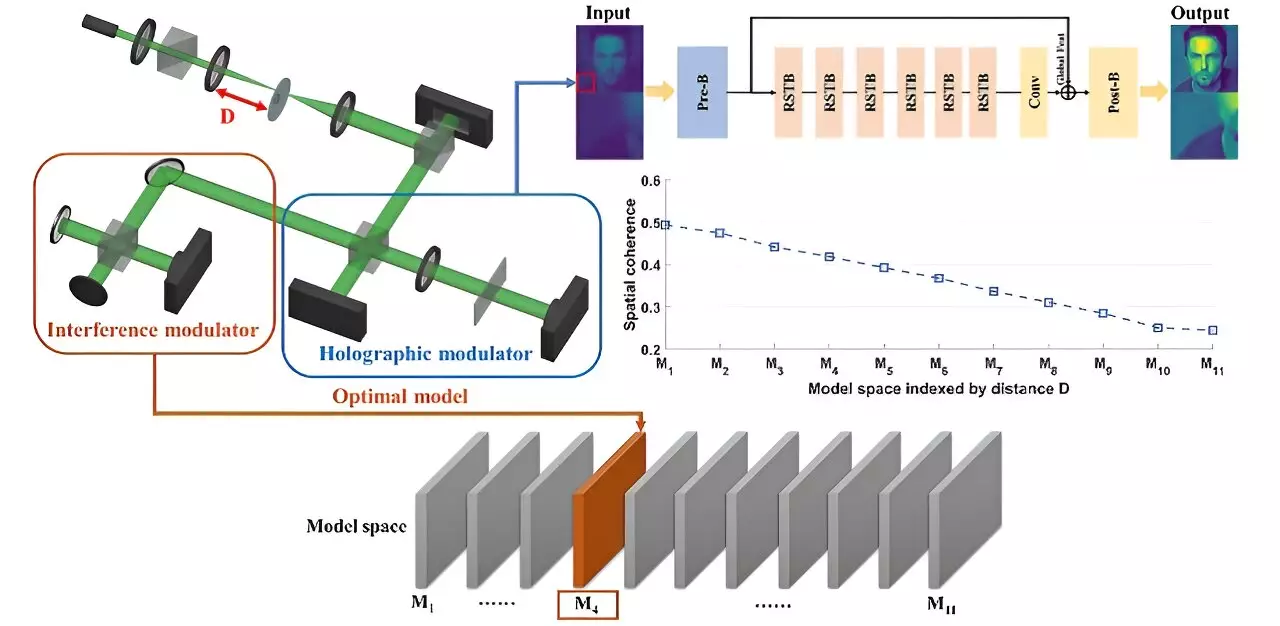

To address the challenges posed by dynamic environments, the researchers developed the TWC-Swin method. TWC-Swin stands for “train-with-coherence Swin transformer”. It uses spatial coherence as a physical prior to guide the training of a deep neural network based on the Swin transformer architecture, which excels at capturing both local and global image features. To test the method, the researchers designed a light processing system that produced holographic images under varying spatial coherence and turbulence conditions. These holograms served as training and testing data for the neural network.
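The article does not describe the network's internals, so the sketch below illustrates only the generic windowing idea behind Swin transformers, not the TWC-Swin implementation. In a Swin layer, self-attention is computed inside small non-overlapping windows (local features), and alternating layers shift the window grid by half a window so that information crosses window borders (a route to more global context). The function names and the tiny 8×8 feature map are assumptions for illustration, and the attention masking that a full shifted-window layer needs is omitted.

```python
import numpy as np

def window_partition(x, window_size):
    """Split an (H, W, C) feature map into non-overlapping square windows.

    Returns an array of shape (num_windows, window_size, window_size, C).
    H and W must be multiples of window_size.
    """
    h, w, c = x.shape
    if h % window_size or w % window_size:
        raise ValueError("H and W must be multiples of window_size")
    x = x.reshape(h // window_size, window_size,
                  w // window_size, window_size, c)
    # Bring the two window-grid axes together, then flatten them.
    return x.transpose(0, 2, 1, 3, 4).reshape(-1, window_size, window_size, c)

def shifted_window_partition(x, window_size):
    """Cyclically shift the map by half a window, then partition it."""
    shift = window_size // 2
    shifted = np.roll(x, shift=(-shift, -shift), axis=(0, 1))
    return window_partition(shifted, window_size)

# Hypothetical 8x8 single-channel feature map with values 0..63.
feature_map = np.arange(8 * 8, dtype=np.float32).reshape(8, 8, 1)
regular = window_partition(feature_map, 4)
shifted = shifted_window_partition(feature_map, 4)
print(regular.shape, shifted.shape)
```

Each window would then be processed by self-attention independently; because the shifted grid straddles the borders of the regular grid, stacking the two lets features mix across neighbouring windows.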

The results show that TWC-Swin restores holographic images effectively even under low spatial coherence and arbitrary turbulence, and that it outperforms traditional convolutional network-based methods in image quality. The method also generalizes well, meaning it can be applied to scenes that were not included in the training data. The research offers a promising approach to image degradation in holographic imaging across diverse scenes.

The Synergy between Optics and Computer Science

One of the major contributions of this research is the successful integration of physical principles into deep learning. By incorporating physical priors such as spatial coherence into the training process, the researchers improved the quality of restored holographic images. This highlights the potential of a synergistic relationship between optics and computer science.

The Future of Holographic Imaging

As the field of holographic imaging continues to evolve, this research opens up new possibilities for improved image quality and clarity. The TWC-Swin method points to a way of overcoming the challenges posed by low spatial coherence and turbulence, making clear imaging possible even in dynamic environments. With further advances in deep learning guided by physical priors such as coherence, holographic imaging could change the way we visualize and interact with three-dimensional images.
