Deep neural networks (DNNs) are now widely used for data analysis across scientific fields. Recent work at the Massachusetts Institute of Technology (MIT) examines the neural scaling behavior of DNN-based models that generate promising chemical structures and that learn interatomic potentials. This article summarizes that research and what it could mean for faster drug discovery and chemistry research.

The study by Nathan Frey and his team was motivated primarily by Kaplan et al.’s paper “Scaling Laws for Neural Language Models.” That paper showed that model performance improves predictably as the size of a neural network and the amount of training data increase. Frey and his team set out to explore how this concept of “neural scaling” applies to models trained on chemistry data.

The Research Approach

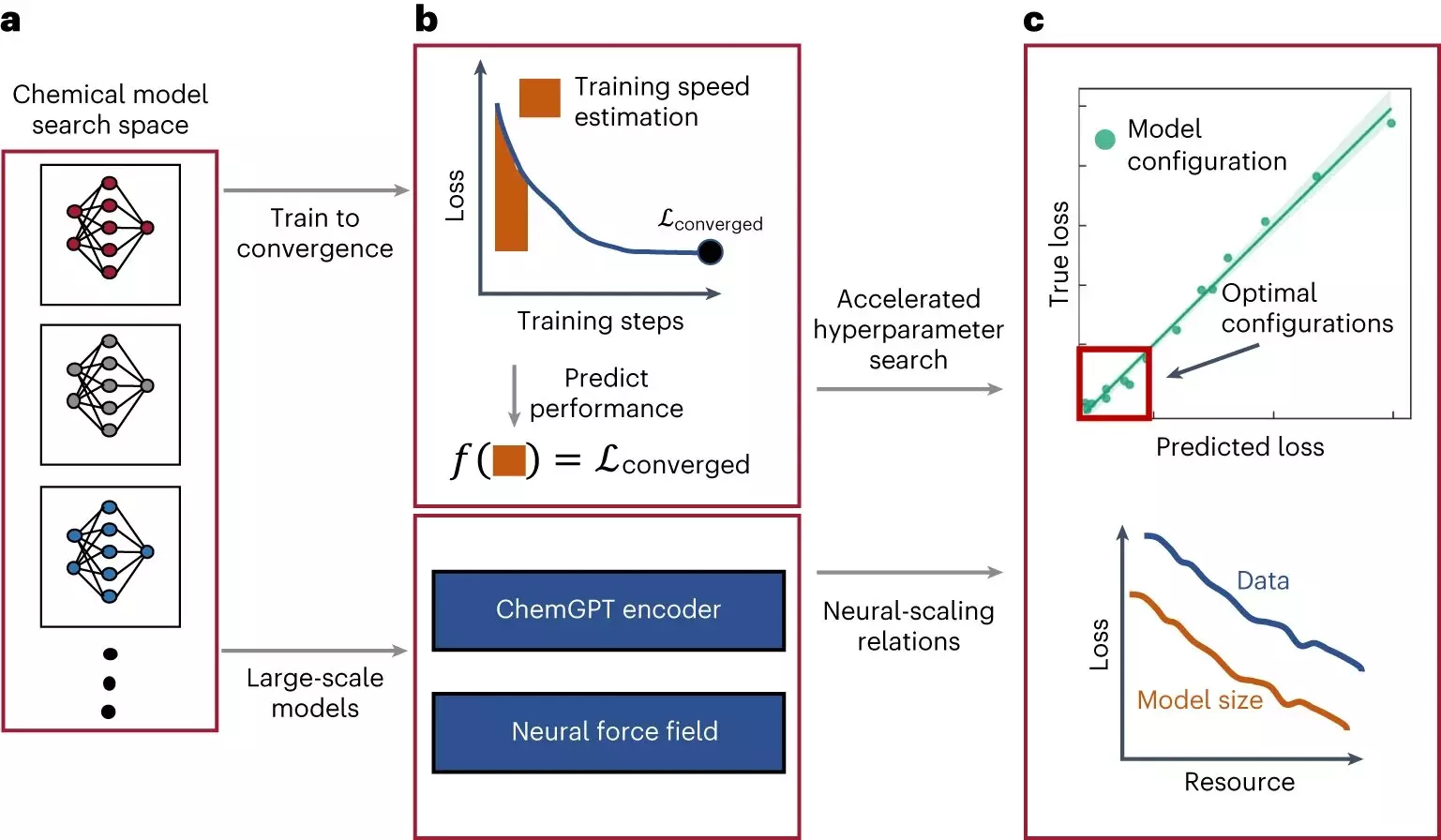

Beginning in 2021, Frey and his colleagues focused on scaling up DNNs. They studied two distinct types of models for chemical data: a large language model (LLM) and graph neural network (GNN)-based models. The LLM, called ‘ChemGPT,’ is autoregressive: it is trained to predict the next token in a molecule’s string representation. The GNNs, by contrast, were trained to predict the energy and forces of a molecule.
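To make "predicting the next token" concrete, here is a minimal sketch of how a molecule string can be turned into training examples for an autoregressive model. It is an illustration only, not ChemGPT's actual pipeline: the SMILES string, the per-character tokenization, the `<bos>`/`<eos>` markers, and the helper name `next_token_pairs` are all assumptions made for this example.

```python
def next_token_pairs(tokens):
    """Return (context, target) pairs: each prefix predicts the token that follows it."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


# Assumption: ethanol written as a SMILES string and split one character per token.
# Real chemistry tokenizers are more elaborate than this.
smiles = "CCO"
tokens = ["<bos>"] + list(smiles) + ["<eos>"]

pairs = next_token_pairs(tokens)
for context, target in pairs:
    print(context, "->", target)
```

Each pair asks the model to predict one token given everything before it, which is the training signal an autoregressive language model learns from.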

Unlocking Neural Scaling

To analyze how ChemGPT and the GNNs scale, Frey’s team measured the effects of model size and dataset size on key performance metrics. This let them estimate the rate at which the models improve as they grow larger and receive more training data. Both kinds of chemical model showed neural scaling behavior, similar to the scaling patterns observed in LLMs and vision models for various applications.
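One common way to quantify such a rate of improvement is to fit a power law of the form loss ≈ a · N^(−α), where N is the model or dataset size. The sketch below does this with a straight-line fit in log-log space. The data points are synthetic and made up for illustration, and the function name `fit_power_law` is hypothetical; this is not the team's code or their measured results.

```python
import numpy as np


def fit_power_law(sizes, losses):
    """Fit loss ~= a * size**(-alpha) by least squares in log-log space."""
    slope, intercept = np.polyfit(np.log(sizes), np.log(losses), deg=1)
    return float(np.exp(intercept)), float(-slope)


# Synthetic data (an assumption for this example): losses generated from a
# known power law, so the fit should recover the parameters.
sizes = np.array([1e5, 1e6, 1e7, 1e8])
true_a, true_alpha = 5.0, 0.2
losses = true_a * sizes ** (-true_alpha)

a, alpha = fit_power_law(sizes, losses)
print(f"a = {a:.3f}, alpha = {alpha:.3f}")
```

The fitted exponent α is the scaling rate: a larger α means the loss falls faster as the model or dataset grows.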

One notable finding is that the team did not observe any fundamental limit for scaling the chemical models they studied, which suggests there is room to investigate further with more computational power and larger datasets. In addition, incorporating physics into the GNNs through equivariance, a property under which a model’s predictions transform consistently when its input is rotated or otherwise transformed, had a marked effect on scaling efficiency. This matters because changes that improve scaling behavior are notoriously hard to find in algorithm development.
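Equivariance can be checked numerically. The sketch below uses a toy pairwise energy function, which is an assumption for illustration and not one of the GNNs from the study. It verifies that rotating the atomic positions leaves the predicted energy unchanged and rotates the predicted forces by the same rotation.

```python
import numpy as np


def energy_and_forces(pos):
    """Toy pairwise model: E = sum_{i<j} (r_ij - 1)^2, with forces F = -dE/dpos."""
    diff = pos[:, None, :] - pos[None, :, :]   # (n, n, 3) displacement vectors
    dist = np.linalg.norm(diff, axis=-1)       # (n, n) pairwise distances
    upper = np.triu_indices(len(pos), k=1)     # count each pair once
    energy = np.sum((dist[upper] - 1.0) ** 2)
    np.fill_diagonal(dist, 1.0)                # avoid 0/0; diagonal terms are zero anyway
    grad = np.sum(2.0 * (dist - 1.0)[..., None] * diff / dist[..., None], axis=1)
    return energy, -grad


# Rotation by 0.7 rad about the z axis (arbitrary choice for the check).
theta = 0.7
rot = np.array([
    [np.cos(theta), -np.sin(theta), 0.0],
    [np.sin(theta),  np.cos(theta), 0.0],
    [0.0,            0.0,           1.0],
])

rng = np.random.default_rng(0)
pos = rng.normal(size=(4, 3))                  # four atoms at random positions

e1, f1 = energy_and_forces(pos)
e2, f2 = energy_and_forces(pos @ rot.T)        # same molecule, rotated

energy_invariant = np.isclose(e1, e2)              # energy should not change
forces_equivariant = np.allclose(f2, f1 @ rot.T)   # forces should rotate with the input
print(energy_invariant, forces_equivariant)
```

A model that has this property built in does not need to learn it from data, which is one plausible reason it can make more efficient use of additional training examples.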

New Perspectives in Chemistry Research

The work by Frey and his team clarifies how AI models for chemistry respond to scale. By showing the performance improvements achieved through neural scaling, the study opens avenues for further research on these models and for improving them. It also raises the question of how well other DNN-based techniques would work for specific scientific applications.

DNNs have become powerful tools for analyzing large amounts of data and accelerating research across scientific domains. Frey and his team at MIT have shown the potential of scaling DNN-based models for chemistry research. By applying the concept of neural scaling and incorporating physics into models, their research points to clear opportunities for further exploration with larger models and datasets.
