Creating brain-like systems for machine learning poses numerous challenges. While artificial neural networks excel at learning complex tasks, training them is slow and power-intensive. Moving to analog systems can cut both time and power consumption, but small errors in analog hardware can quickly compound. An earlier electrical network developed at the University of Pennsylvania could handle only linear tasks, which limited its capabilities.

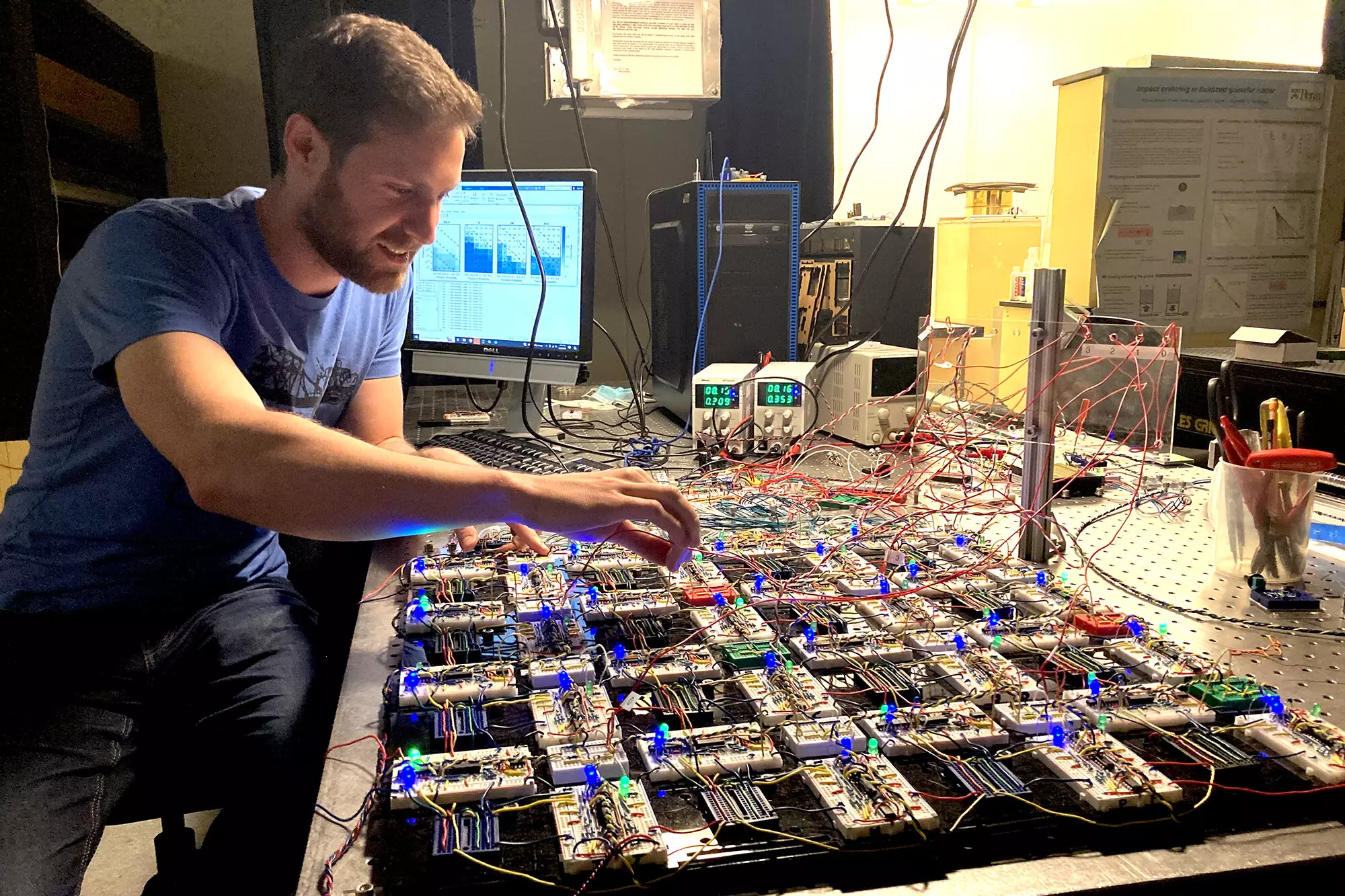

Researchers have now introduced a contrastive local learning network that addresses the shortcomings of previous systems. This analog system is fast, low-power, scalable, and capable of learning complex tasks, including XOR relationships and nonlinear regression. The network evolves independently based on local rules, resembling the emergent learning observed in the human brain.
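To make the idea of a local, contrastive rule concrete, here is a minimal software sketch. It assumes the update rule usually given for Coupled Learning: compare a "free" state with a "clamped" state in which the output is nudged slightly toward the target, and let each edge change its conductance using only its own voltage drop in the two states. Everything specific here is an illustrative assumption, not the researchers' hardware or code: the 4-node layout, the names `solve_voltages` and `train`, the nudge `eta`, the learning rate `alpha`, and the target gain of 0.3. The sketch uses plain linear resistors, so it shows only the learning rule, not the nonlinear tasks described above.

```python
import numpy as np

# Hypothetical 4-node resistor network (an assumption for illustration):
# node 0 = input, node 1 = ground, node 2 = output, node 3 = hidden.
EDGES = [(0, 2), (1, 2), (0, 3), (1, 3), (2, 3)]
N_NODES = 4


def solve_voltages(k, fixed):
    """Node voltages for conductances k, given imposed voltages {node: volts}."""
    lap = np.zeros((N_NODES, N_NODES))
    for (i, j), k_e in zip(EDGES, k):
        lap[i, i] += k_e
        lap[j, j] += k_e
        lap[i, j] -= k_e
        lap[j, i] -= k_e
    fixed_idx = sorted(fixed)
    free_idx = [n for n in range(N_NODES) if n not in fixed]
    v = np.zeros(N_NODES)
    v[fixed_idx] = [fixed[n] for n in fixed_idx]
    # Kirchhoff's current law at the unconstrained nodes: L_ff v_f = -L_fc v_c
    rhs = -lap[np.ix_(free_idx, fixed_idx)] @ v[fixed_idx]
    v[free_idx] = np.linalg.solve(lap[np.ix_(free_idx, free_idx)], rhs)
    return v


def edge_drops(v):
    """Voltage drop across every edge."""
    return np.array([v[i] - v[j] for i, j in EDGES])


def train(target_gain=0.3, eta=0.1, alpha=0.2, steps=500):
    """Train the network so that V_out is approximately target_gain * V_in."""
    k = np.ones(len(EDGES))
    inputs = [0.5, 1.0, 1.5]  # cycled deterministically
    for step in range(steps):
        x = inputs[step % len(inputs)]
        # Free state: only the input and ground are imposed.
        free = solve_voltages(k, {0: x, 1: 0.0})
        # Clamped state: the output is also held, nudged toward the target.
        nudged = free[2] + eta * (target_gain * x - free[2])
        clamped = solve_voltages(k, {0: x, 1: 0.0, 2: nudged})
        # Local rule: each edge compares its own drop in the two states.
        dv_free, dv_clamped = edge_drops(free), edge_drops(clamped)
        k += (alpha / eta) * 0.5 * (dv_free**2 - dv_clamped**2)
        k = np.maximum(k, 1e-3)  # keep conductances physical (positive)
    return k


def output_gain(k):
    """Output voltage when the input is held at 1 V."""
    return solve_voltages(k, {0: 1.0, 1: 0.0})[2]
```

Calling `output_gain(np.ones(5))` gives the untrained gain, and `output_gain(train())` gives the trained one for comparison. Note that no edge ever sees a global error or gradient; the update for each conductance depends only on the two voltage drops measured across that same edge.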

One of the key advantages of the contrastive local learning network is its robustness to errors. Unlike traditional machine learning systems, this network has no knowledge of its own structure, which makes it highly tolerant of variations in design. This flexibility opens up new possibilities for scaling up the system without compromising performance.

The researchers believe that studying the contrastive local learning network can provide valuable insights into biological processes. By leveraging simpler dynamics and modular components, this system offers a unique opportunity to understand emergent learning. The precise trainability and simplicity of this network make it a promising model for studying various biological and computational problems.

Beyond its implications for machine learning, the contrastive local learning network has practical applications in interfacing with data collection devices like cameras and microphones. The system’s ability to autonomously adapt to inputs and learn tasks could revolutionize how data is processed in various applications.

As the research progresses, the team is focused on scaling up the design and exploring key questions about memory storage, noise effects, network architecture, and nonlinearity optimization. By building on the foundation laid by the Coupled Learning framework, the researchers aim to unlock the full potential of contrastive local learning networks in advancing machine learning capabilities.

The development of contrastive local learning networks represents a significant milestone in the field of machine learning. By combining the principles of neural networks with analog systems, researchers have created a versatile and adaptive learning model with far-reaching implications. As this technology continues to evolve, it has the potential to transform how we approach complex tasks and data processing in the digital age.
