In recent years, the burgeoning field of speech emotion recognition (SER) has benefitted immensely from the advances in deep learning methodologies. These technologies not only facilitate high accuracy in emotion detection but also expand the horizons of various applications ranging from customer service to mental health monitoring. However, as with any promising technology, SER systems are not without their vulnerabilities. A critical aspect of these vulnerabilities stems from the susceptibility of deep learning models to adversarial attacks, which can significantly undermine their performance.

Adversarial attacks are manipulative inputs designed to confuse machine learning systems and lead them to produce incorrect outputs. Research conducted by a team from the University of Milan sheds light on the stark implications of these attacks on SER models, particularly those employing convolutional neural networks (CNN) intertwined with long short-term memory (LSTM) architectures. The study, published in *Intelligent Computing*, methodically evaluated both white-box and black-box attack strategies across various languages and genders.

White-box attacks exploit complete knowledge of the model’s architecture and parameters, allowing attackers to generate adversarial examples with focused precision. In contrast, black-box attacks function with limited information, relying solely on the model’s output to inform their strategy. The study revealed that despite the inherent disadvantages, some black-box attacks were remarkably effective, raising alarming concerns about the security of SER systems.

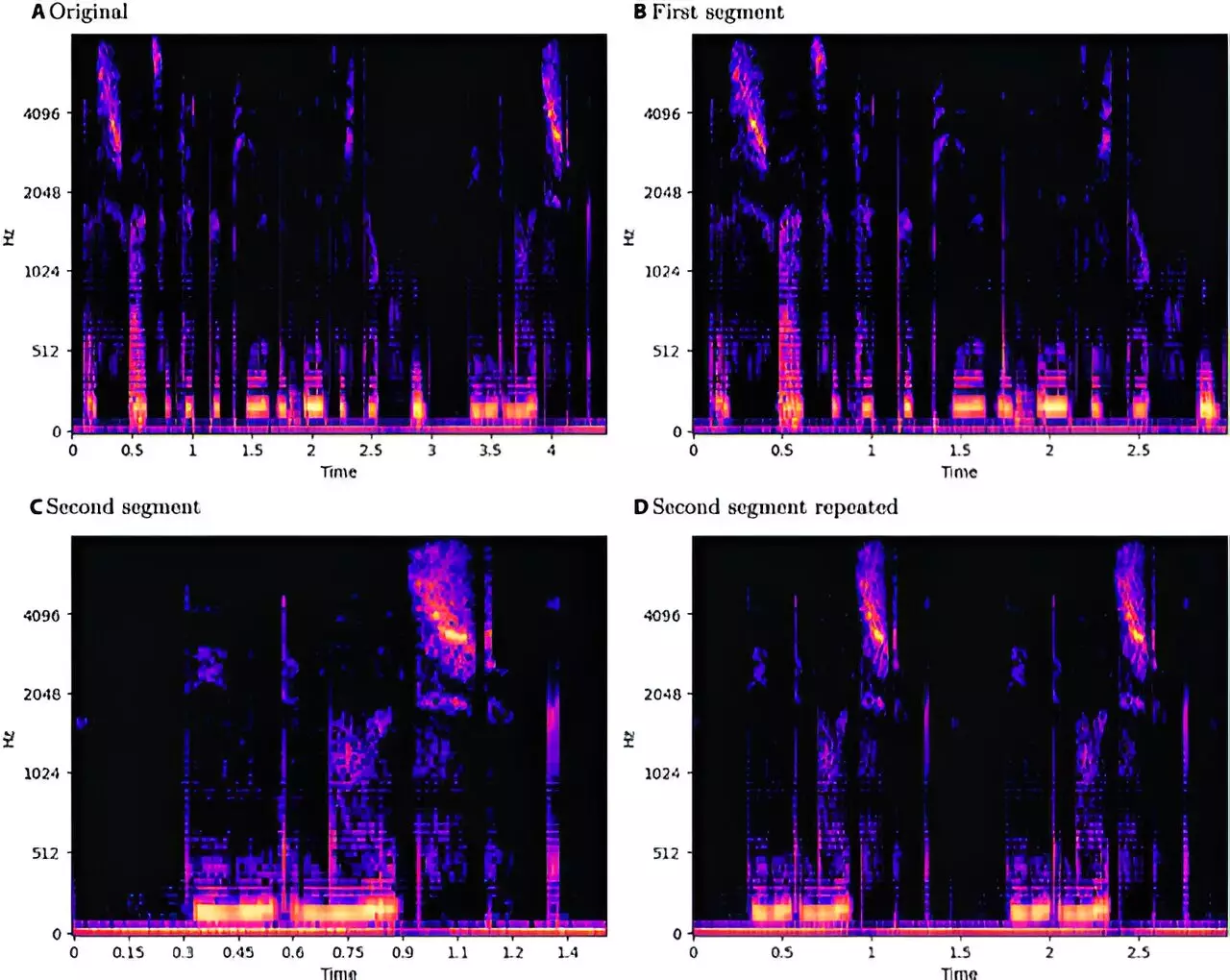

The researchers scrutinized the impacts of various adversarial attack techniques on three distinct datasets: EmoDB for German, EMOVO for Italian, and RAVDESS for English. Techniques such as the Fast Gradient Sign Method and Carlini and Wagner were employed for white-box attacks, while black-box scenarios made use of One-Pixel Attack and Boundary Attack methodologies. To ensure that their evaluations were grounded in solid scientific practices, they established a standardized pipeline for audio data processing combined with feature extraction tailored to their CNN-LSTM architecture.

The results were illuminating. It was found that all types of adversarial attacks significantly compromised the performance of SER models, with notable variations across languages; English displayed a heightened vulnerability, whereas Italian demonstrated resistance. Moreover, while the model’s performance was generally lower for male speech samples, the difference was rather marginal. The consistent methodology across the three datasets fortified the credibility of the findings, emphasizing an urgent need for addressing these vulnerabilities.

The implications of this research are twofold. On one hand, the study reveals critical vulnerabilities, which serve as a wake-up call for developers and researchers in the SER domain. The fact that black-box attacks can yield noteworthy successes with minimal understanding of the underlying models presents a substantial security threat. On the other hand, the researchers argue for a balanced approach where such vulnerabilities are openly shared, as transparency may serve as a catalyst for building stronger, more resilient systems.

The authors highlighted that not disclosing findings related to vulnerabilities could be counterproductive, impeding efforts to fortify systems against potential threats. By making these vulnerabilities public, researchers and practitioners can engage in collaborative defenses, innovating solutions that bolster defenses against adversarial inputs.

As SER technologies continue to evolve, a crucial aspect of their development must center around enhancing their robustness against adversarial attacks. Future research could focus on developing more intricate models that can better withstand various forms of threats, possibly incorporating diverse training data that includes adversarial examples during the training phase.

While speech emotion recognition represents a significant frontier for artificial intelligence applications, the vulnerabilities exposed by adversarial attacks cannot be underestimated. As we forge ahead in this vital domain, it becomes imperative that researchers, developers, and policymakers collaborate to build systems that are not only effective but also secure against exploitation. The dialogue surrounding ethics, transparency, and resilience in technology must remain at the forefront of this evolving field.

Leave a Reply