In recent years, computer scientists have made significant progress in developing deep neural networks (DNNs) for real-world applications. Some studies, however, have found that these models can be unfair: their performance can differ depending on the data they were trained on and the hardware platforms they run on. Biases in facial recognition algorithms in particular have raised concerns and underlined the need for fair AI systems. Researchers at the University of Notre Dame have now explored how hardware systems, such as computing-in-memory (CiM) devices, can influence the fairness of DNNs.

While algorithmic fairness has received much attention, the role of hardware in shaping fairness has been largely overlooked. The Notre Dame study, by Shi and his team, aimed to bridge this gap by investigating how emerging hardware designs, specifically CiM architectures, affect the fairness of AI models. Through a series of experiments, the researchers found that hardware has a significant impact on the fairness of neural networks. For example, larger and more complex models tend to be fairer, but they are harder to deploy on resource-constrained devices.
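
To make "fairness" concrete, one simple measure is the gap in accuracy between demographic groups: the smaller the gap, the more evenly a model performs. The article does not say which metric the researchers used, so the sketch below is only an illustrative assumption, and the function names `per_group_accuracy` and `accuracy_gap` are hypothetical.

```python
from collections import defaultdict


def per_group_accuracy(y_true, y_pred, groups):
    """Return a dict mapping each group label to the accuracy on that group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(y_true, y_pred, groups):
    """Illustrative (assumed) fairness score: best minus worst group accuracy.

    0.0 means every group is served equally well; larger values mean
    the model favors some groups over others.
    """
    accuracies = per_group_accuracy(y_true, y_pred, groups)
    return max(accuracies.values()) - min(accuracies.values())
```

Evaluating the same model once in software and once on simulated or real hardware, and comparing the two gaps, would be one way to see whether the hardware itself shifts fairness.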

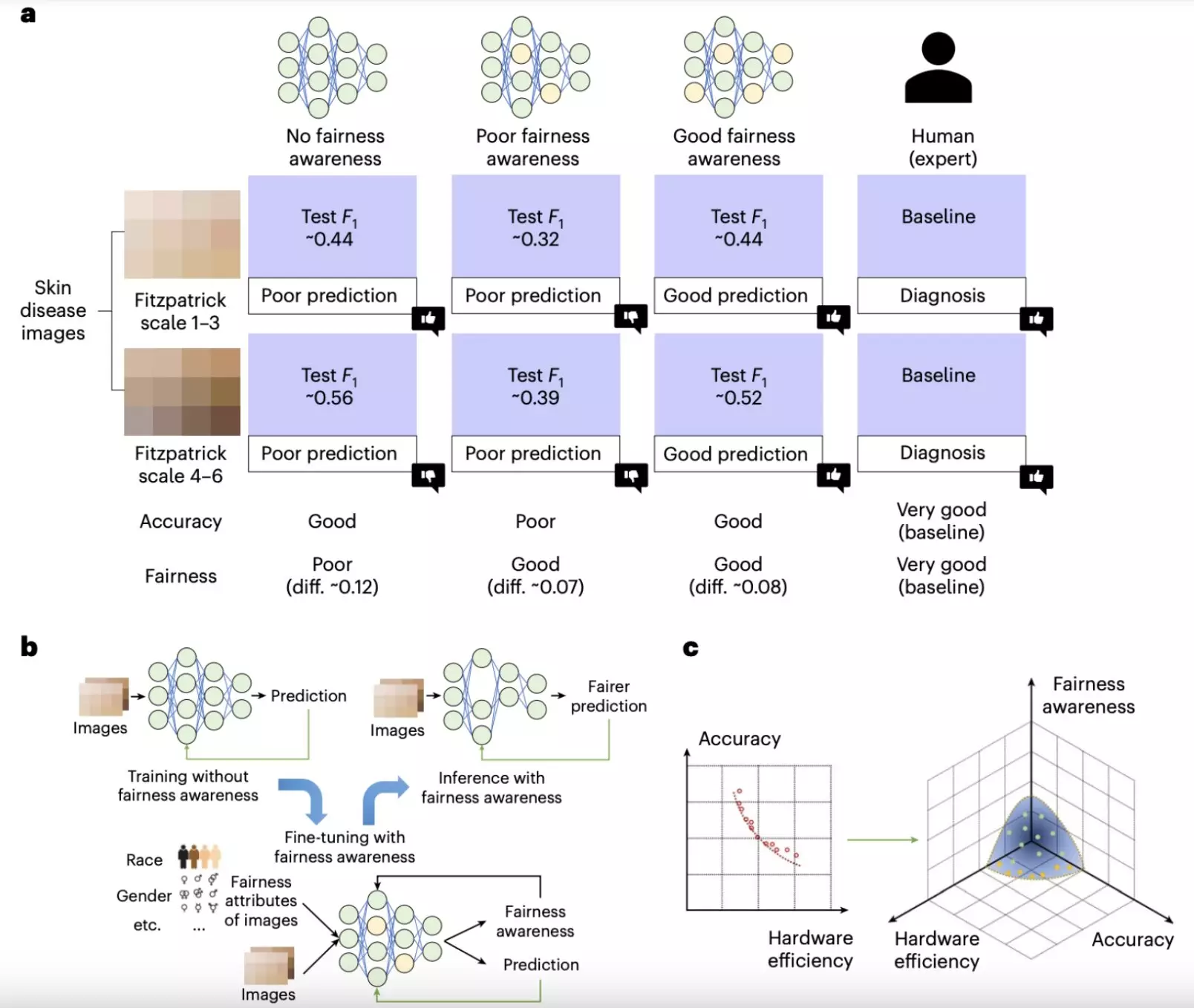

The researchers proposed strategies to improve the fairness of AI models without compromising computational efficiency. One such strategy involves compressing larger models to reduce computational load while maintaining performance. Additionally, the study examined non-idealities in hardware platforms, such as device variability and processing issues, and recommended noise-aware training strategies to enhance the robustness and fairness of AI models. These findings underscore the importance of considering hardware factors in achieving a balance between accuracy and fairness in AI systems.
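
The idea behind noise-aware training can be sketched in a few lines: during training, the weights are randomly perturbed on every forward pass, so the model learns parameters that still work when a device stores them imprecisely. The example below is a minimal sketch on a tiny logistic-regression model, not the researchers' method. The Gaussian noise model, the `noise_std` value, and the names `train_noise_aware` and `predict` are all assumptions made for illustration.

```python
import math
import random


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_noise_aware(data, labels, noise_std=0.1, lr=0.1, epochs=200, seed=0):
    """Train logistic regression while injecting noise into the weights.

    Assumption: device variability is modeled as zero-mean Gaussian noise
    added to each weight. The bias is left noise-free for simplicity.
    """
    rng = random.Random(seed)
    weights = [0.0] * len(data[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(data, labels):
            # Perturb the weights for this forward pass only,
            # mimicking imprecise storage on the device.
            noisy = [w + rng.gauss(0.0, noise_std) for w in weights]
            pred = sigmoid(sum(w * xi for w, xi in zip(noisy, x)) + bias)
            err = pred - y
            # The gradient is computed from the noisy forward pass
            # but applied to the clean (stored) weights.
            weights = [w - lr * err * xi for w, xi in zip(weights, x)]
            bias -= lr * err
    return weights, bias


def predict(weights, bias, x):
    """Classify x with the clean weights (no injected noise)."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if sigmoid(z) >= 0.5 else 0
```

In a real DNN the same pattern would apply layer by layer, with the noise level chosen to match the measured variability of the target device.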

Implications for Future AI Development

The research by Shi and his colleagues sheds light on the critical role of hardware in ensuring the fairness of AI models. By addressing hardware-induced biases and limitations, developers can create more equitable AI systems for sensitive applications like medical diagnostics. Moving forward, the researchers plan to explore cross-layer co-design frameworks that optimize neural network architectures for fairness while considering hardware constraints. This holistic approach to AI development could lead to the creation of new hardware platforms designed to support fairness and efficiency simultaneously.

Taken together, the findings show that hardware and algorithmic fairness are closely linked. By building hardware considerations into AI development, researchers and developers can mitigate biases and promote equitable outcomes for diverse user groups. Ongoing research in this area could yield AI systems that are both accurate and fair.
