The advancement of artificial intelligence (AI) has revolutionized various industries, but it also brings forth a chilling aspect – the rise of deepfake images. These AI-generated images have caught the attention of many due to their ability to create humorous scenarios, such as Arnold Schwarzenegger’s face superimposed on Clint Eastwood or Mike Tyson transformed into Oprah. However, recent developments have shown a disturbing trend as digital fakery turns malicious.

Although some deepfakes may generate laughter, they have also become a tool for deception and manipulation. The misuse of deepfake technology poses serious threats to individuals and society as a whole. Tom Hanks, a renowned actor, recently had to denounce the unauthorized use of his AI-generated likeness in an advertisement promoting a dental health plan. The influential YouTuber MrBeast, whose videos have drawn billions of views, was falsely portrayed offering iPhone 15 Pros for a ridiculously low price.

Furthermore, ordinary citizens have become victims of this digital deception. People’s faces are being inserted into images on social media without their consent, exposing them to potential harm and privacy violations. The most disturbing cases involve “revenge porn,” where malicious individuals post fabricated explicit images of their former partners as a means of revenge. As the United States approaches a highly contentious presidential election in 2024, the prospect of forged imagery and videos promises unprecedented ugliness in the political landscape.

Beyond personal harm, the proliferation of deepfake images could significantly impact the legal system. A National Public Radio (NPR) report highlights how lawyers are increasingly challenging evidence produced in court, capitalizing on a confused public grappling with the blurred boundaries of truth and falsehood. Hany Farid, an expert in digital image analysis at the University of California, Berkeley, warned about the erosion of reality in the age of deepfakes, stating, “That’s exactly what we were concerned about, that when we entered this age of deepfakes, anybody can deny reality.” This phenomenon is known as the “liar’s dividend”: once fakes are common, genuine evidence can be dismissed as fabricated.

Much of the responsibility for countering disinformation lies with major technology companies such as OpenAI, Amazon, and Alphabet, including its DeepMind unit. They have pledged to develop tools and technologies to combat the spread of fake content. Watermarking AI-generated content has emerged as a key approach to identifying manipulated images. However, recent research from the University of Maryland demonstrates the shortcomings of current watermarking methods. Soheil Feizi, an author of the study, revealed that the team successfully bypassed protective watermarks, raising concerns about the effectiveness of these measures.

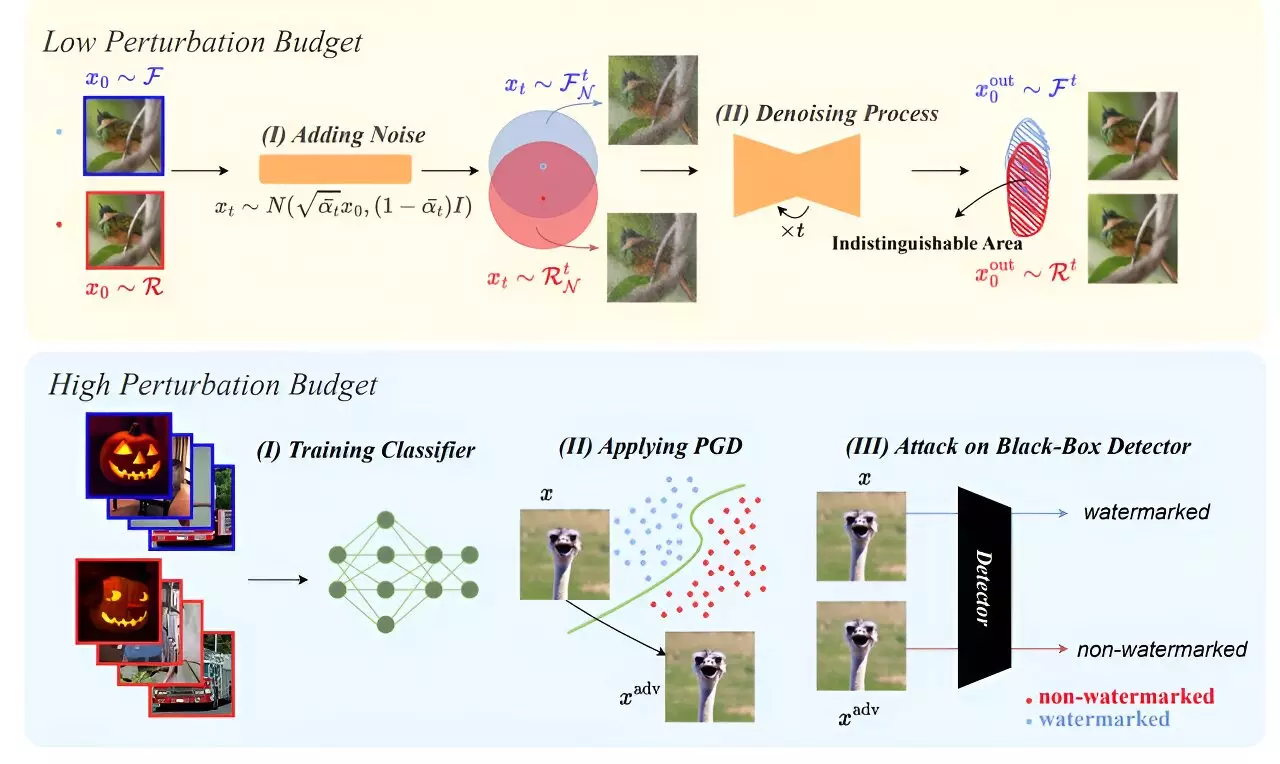

Feizi’s team employed diffusion purification, a technique that applies Gaussian noise to a watermarked image and then removes the noise, stripping out the watermark while leaving only minimal alterations to the rest of the image. They also showed how bad actors can exploit black-box watermarking algorithms to create fake photos that deceive detection systems. This highlights the constant struggle between those who build better defense mechanisms and those who seek to break them.
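To make the noise-then-clean-up idea concrete, here is a toy sketch in Python. It is not the Maryland team's code and it does not use a diffusion model: a simple 3x3 box blur stands in for the learned denoiser, the watermark is a hypothetical low-amplitude pseudo-random pattern, and every function name and parameter value below is an assumption chosen for illustration. It only shows why a faint hidden pattern can fall below a detector's threshold after such a step while the picture itself barely changes.

```python
import numpy as np


def make_image(size=64):
    """Smooth synthetic grayscale image with values around 0.5 (illustrative only)."""
    x = np.linspace(0.0, 1.0, size)
    xx, yy = np.meshgrid(x, x)
    return 0.5 + 0.2 * np.sin(2 * np.pi * xx) * np.cos(2 * np.pi * yy)


def make_pattern(size, seed):
    """Hypothetical secret watermark: a pseudo-random field of +1/-1 values."""
    rng = np.random.default_rng(seed)
    return rng.choice([-1.0, 1.0], size=(size, size))


def embed(image, pattern, strength=0.03):
    """Add the pattern at low amplitude so it is hard to see."""
    return image + strength * pattern


def detect_score(image, pattern):
    """Correlate the mean-removed image with the secret pattern."""
    return float(np.mean((image - image.mean()) * pattern))


def box_blur(image, k=3):
    """k x k mean filter with edge padding (stand-in for a learned denoiser)."""
    pad = k // 2
    padded = np.pad(image, pad, mode="edge")
    out = np.zeros_like(image)
    h, w = image.shape
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (k * k)


def purify(image, noise_std=0.05, seed=0):
    """Add Gaussian noise, then smooth it away again."""
    rng = np.random.default_rng(seed)
    noisy = image + rng.normal(0.0, noise_std, image.shape)
    return box_blur(noisy)


def run_demo():
    """Return detector scores before/after purification and the mean pixel change."""
    clean = make_image()
    pattern = make_pattern(clean.shape[0], seed=42)
    marked = embed(clean, pattern)
    purified = purify(marked)
    return {
        "score_before": detect_score(marked, pattern),
        "score_after": detect_score(purified, pattern),
        "mean_abs_change": float(np.mean(np.abs(purified - clean))),
    }


if __name__ == "__main__":
    for name, value in run_demo().items():
        print(f"{name}: {value:.4f}")
```

With a detection threshold set at half the embedding strength (0.015 here, again an assumption), the watermarked image scores above the threshold and the purified one below it, while the average per-pixel change stays small. Real watermarks and real purification attacks are far more sophisticated on both sides, which is why the research result is notable.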

While Feizi expressed cautious optimism, stating that designing a robust watermark is a challenging but not impossible task, the current reality demands personal vigilance and scrutiny. When reviewing images that carry significance or potential harm, individuals must exercise due diligence, double-check sources, and apply a healthy dose of common sense.

The proliferation of deepfake images poses significant challenges to society. Not only do these manipulated images carry the potential for personal harm and privacy violations, but they also threaten the integrity of evidence in the legal system. The battle against deepfakes requires the collaborative efforts of technology companies, researchers, and individuals alike. As technology continues to evolve, it is essential to develop robust solutions that can identify and mitigate the impact of deepfake images. Until then, individuals must be vigilant and discerning to protect themselves from the dangers posed by this burgeoning menace.
