Language models have become essential tools in fields ranging from healthcare to education. Yet natural language is complex enough that even the most advanced models can struggle with specialized questions. This limitation raises a pertinent question: how can we enhance the capabilities of general-purpose large language models (LLMs) without overloading them with excessive data or intricate algorithms? Researchers at MIT propose an answer in a new algorithm called Co-LLM, which combines the strengths of a general-purpose model and a specialized one to produce more accurate responses more efficiently.

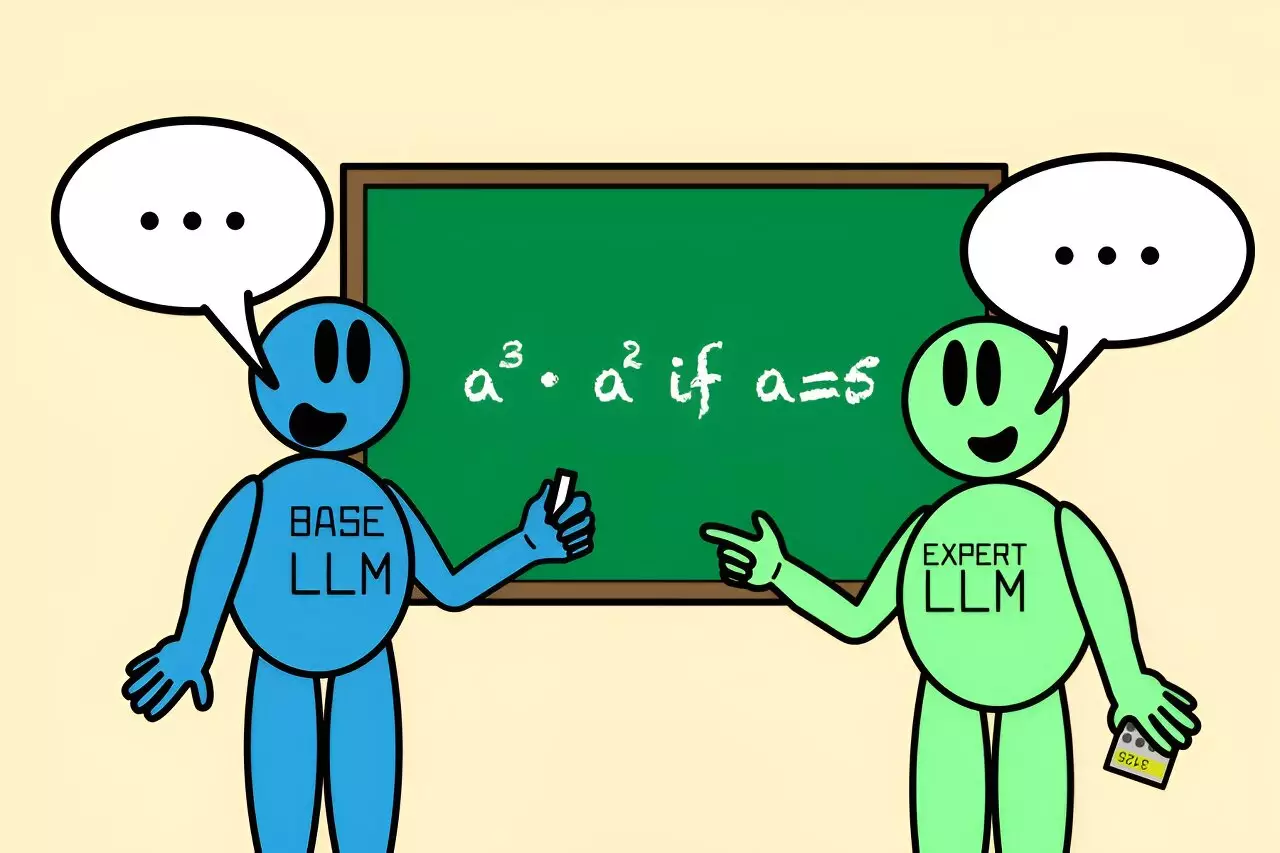

Consider a scenario where you’re asked a complex question but know only part of the answer. In such cases, the most effective strategy is often to consult an expert. This principle underpins the collaborative approach of Co-LLM, which pairs a general-purpose LLM with a specialized counterpart. A significant hurdle remains, however: teaching an LLM to recognize when it should rely on another model’s expertise. Traditional methods often involve complex formulas or large amounts of labeled data. MIT’s CSAIL has taken a different route, creating a more organic collaboration framework in which the two models work together and the general model learns when to defer, improving overall accuracy.

At the heart of this approach lies the Co-LLM algorithm, which acts as a bridge between a general-purpose LLM and a specialized expert model. As the general model generates a response, Co-LLM reviews each word (more precisely, each token) and decides whether to call on the expert model for that part of the answer. This interaction produces more accurate answers, and it saves computation because the expert is invoked only when needed.

Co-LLM relies on a mechanism called a “switch variable.” This learned component estimates, token by token, which of the two models is more competent to produce the next piece of the response. Think of it as a savvy project manager deciding who is best suited for each task. For example, if asked to name some extinct bear species, the general-purpose LLM begins drafting a response. The switch variable identifies the points in that response that would benefit from the expert’s input, and the expert supplies precise details there, such as the dates when those species went extinct.
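To make the token-by-token handoff concrete, here is a minimal sketch in Python. It is not the researchers' implementation: the function names, the fixed `threshold`, and the toy "models" are all assumptions made for illustration, and the hand-written `toy_defer` rule merely stands in for the learned switch variable.

```python
from typing import Callable, List, Tuple

EOS = "<eos>"  # hypothetical end-of-sequence marker


def collaborative_decode(
    base_next: Callable[[List[str]], str],
    expert_next: Callable[[List[str]], str],
    defer_score: Callable[[List[str]], float],
    prompt: List[str],
    max_tokens: int = 20,
    threshold: float = 0.5,
) -> Tuple[List[str], List[str]]:
    """Generate tokens one at a time, picking a model for each position.

    defer_score stands in for the switch variable: a value above
    `threshold` means the expert should produce the next token.
    """
    tokens = list(prompt)
    sources: List[str] = []
    for _ in range(max_tokens):
        use_expert = defer_score(tokens) > threshold
        token = expert_next(tokens) if use_expert else base_next(tokens)
        if token == EOS:
            break
        tokens.append(token)
        sources.append("expert" if use_expert else "base")
    return tokens[len(prompt):], sources


# Toy stand-ins (assumptions): the base model follows a fixed script and
# has no real answer at the date slot; the expert fills that slot.
PROMPT = ["Name", "an", "extinct", "bear", "species", ":"]
BASE_SCRIPT = ["The", "species", "went", "extinct", "around", "???", EOS]


def toy_base(tokens: List[str]) -> str:
    step = len(tokens) - len(PROMPT)
    return BASE_SCRIPT[step] if step < len(BASE_SCRIPT) else EOS


def toy_expert(tokens: List[str]) -> str:
    return "[date from expert]"


def toy_defer(tokens: List[str]) -> float:
    # Hand-written rule for the demo; a real switch would be learned.
    return 0.9 if tokens and tokens[-1] == "around" else 0.1


generated, sources = collaborative_decode(toy_base, toy_expert, toy_defer, PROMPT)
print(" ".join(generated))
print(sources.count("expert"), "of", len(sources), "tokens came from the expert")
```

In this toy run the expert is consulted for a single token, the date slot, which mirrors the efficiency argument: the larger or more specialized model is only run where the general model is likely to be wrong.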

Shannon Shen, a lead researcher involved in this work at MIT, succinctly summarizes the essence of Co-LLM: enabling a general model to “call” on an expert model only when it encounters areas where it lacks sufficient knowledge. This mirrors human behavior, where we consult specialists for detailed inquiries. The algorithm leverages domain-specific training to cultivate this collaboration, making it particularly effective in fields like biomedicine and advanced mathematics.

Co-LLM is useful across many domains, especially those that demand precise information. Imagine asking a general-purpose LLM to name the ingredients of a specific prescription drug; it may answer incorrectly. With a specialized biomedical model supplying the relevant details, the answer becomes more accurate. By testing Co-LLM on data sets such as BioASQ, a collection of biomedical questions, the researchers demonstrated its capacity to handle questions that traditionally require deep expertise.

Co-LLM can also steer models toward correct answers in problem-solving tasks. For instance, when a general-purpose LLM miscalculates the answer to a mathematical question, an expert math model can step in and correct it. In one example the researchers describe, the general model got a simple calculation wrong on its own, while the combined system arrived at the correct result.

Future Directions for Co-LLM

MIT’s innovative approach doesn’t stop at merely improving existing interactions between models. Future advancements aim to incorporate human-like self-correction methods, enabling Co-LLM to backtrack and revise outputs when the expert’s information is flawed. This aspect of adaptability not only enhances the validity of the responses but also ensures that the models remain updated with current knowledge.

Moreover, because the base model can be trained independently of the expert model, new information can be folded in through updates, so that answers draw on the most current data. The researchers also see potential for Co-LLM to help keep enterprise documentation up to date. Together, these directions promise to make AI language models more efficient and relevant across industries.

The development of the Co-LLM algorithm signifies a pivotal advancement in the collaborative potential of language models. By mimicking human teamwork through dynamic interaction between specialized and general models, Co-LLM not only enhances the accuracy of AI-generated responses but also optimizes resource use. With future advancements on the horizon, Co-LLM stands to revolutionize how AI assists across various domains, offering a flexible and responsive framework tailor-made for the demands of modern information inquiry.
