Quantum computers have shown great promise in potentially outperforming conventional computers in various information processing tasks, including machine learning and optimization. However, their widespread deployment is hindered by noise, which introduces errors into computations. One proposed technique to tackle these errors is quantum error correction, which aims to detect and correct errors as they occur. Quantum error mitigation takes a different approach: it lets the noisy computation run to completion and only then tries to infer the correct result from the noisy outputs. While quantum error mitigation was initially seen as a more practical solution than error correction, recent research has revealed significant limitations in its scalability.

A recent study by researchers from institutions including Massachusetts Institute of Technology, Ecole Normale Superieure in Lyon, University of Virginia, and Freie Universität Berlin has shed light on the inefficiency of quantum error mitigation for large-scale quantum computers. The research indicates that as quantum computers grow in size, the resources and effort required for error mitigation grow significantly as well. This raises concerns about the long-term viability of quantum error mitigation as a solution to noise in quantum computation.

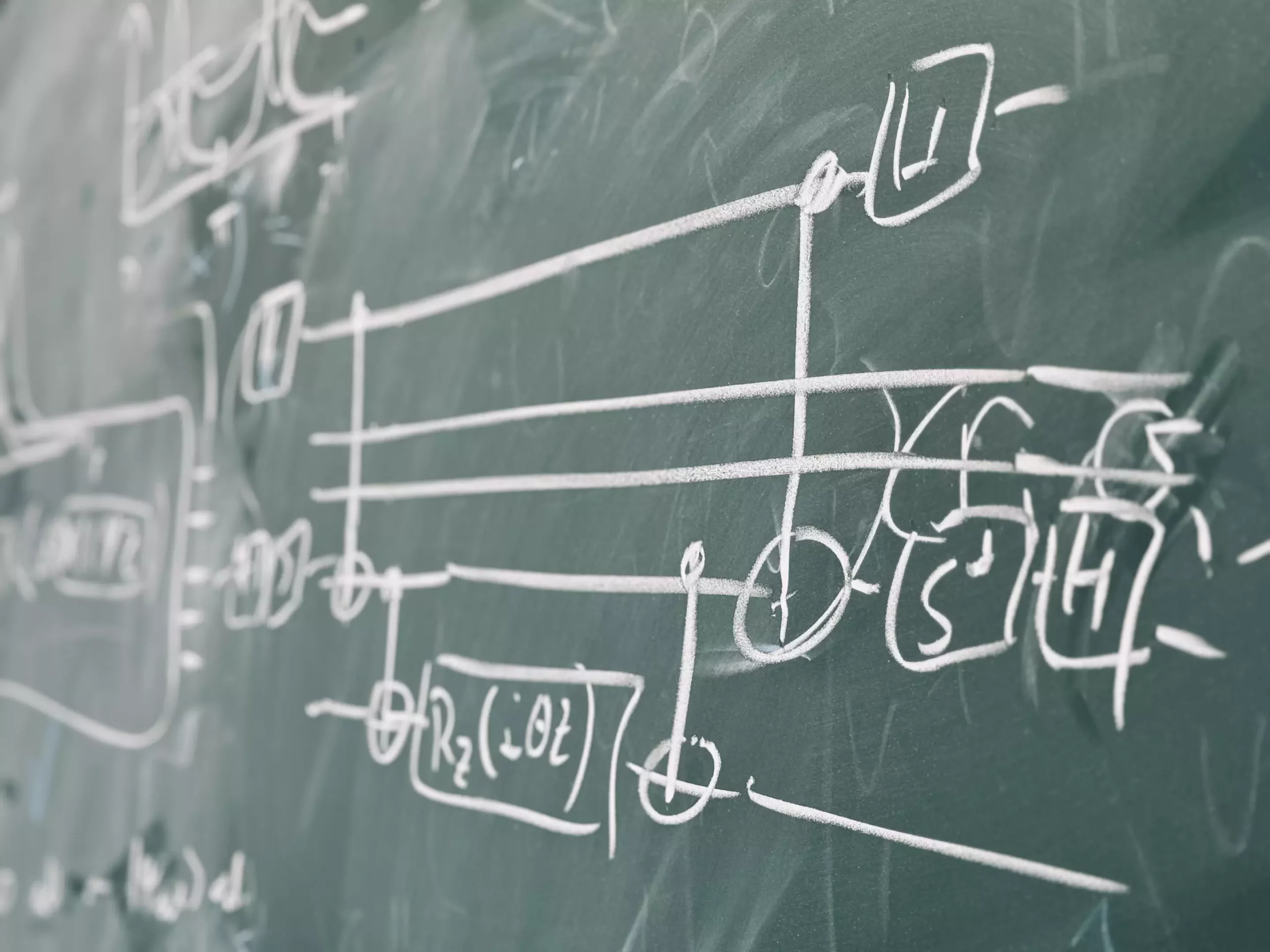

One example of an inefficient mitigation scheme highlighted in the research is ‘zero-noise extrapolation.’ This method deliberately increases the noise in a system, measures the result at several noise levels, and then extrapolates back to estimate what the result would be with zero noise. However, the scalability of this approach is questionable, as deliberately adding noise to an already noisy system is not a sustainable solution for long-term quantum computing. Additionally, noisy quantum gates pose a considerable challenge, as each layer of gates in a circuit introduces errors that accumulate throughout the computation. This results in a trade-off: a circuit must be deep enough to perform a meaningful computation, but every additional layer adds more noise.
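The extrapolation idea can be illustrated with a small sketch. This is a toy model and not the method analyzed in the study: it assumes, purely for illustration, that the measured value decays exponentially with a noise scale factor, and the names `noisy_expectation` and `extrapolate_to_zero` and the `decay` value are hypothetical. The fit through the measured points is a standard Richardson-style polynomial extrapolation.

```python
import math

def noisy_expectation(scale, ideal=1.0, decay=0.15):
    """Toy noise model (an assumption): the measured expectation value
    shrinks exponentially as the noise is scaled up."""
    return ideal * math.exp(-decay * scale)

def extrapolate_to_zero(scales, values):
    """Fit the unique polynomial through the (scale, value) points and
    evaluate it at scale 0 (Lagrange form of Richardson extrapolation)."""
    estimate = 0.0
    for i, (xi, yi) in enumerate(zip(scales, values)):
        weight = 1.0
        for j, xj in enumerate(scales):
            if j != i:
                weight *= (0.0 - xj) / (xi - xj)
        estimate += weight * yi
    return estimate

# Scale 1.0 is the hardware's native noise; 2.0 and 3.0 are amplified noise.
scales = [1.0, 2.0, 3.0]
values = [noisy_expectation(s) for s in scales]

unmitigated = values[0]
mitigated = extrapolate_to_zero(scales, values)
print(f"unmitigated: {unmitigated:.4f}, mitigated: {mitigated:.4f}, ideal: 1.0000")
```

In this noiseless toy setting the extrapolated value lands much closer to the ideal value than the raw measurement does. On real hardware each measured point also carries statistical error, and the extrapolation amplifies that error, which is where the cost in additional runs comes from.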

The findings of the research team led by Yihui Quek and Jens Eisert suggest that quantum error mitigation faces inherent limitations that hinder its scalability. As quantum circuits become larger and more complex, the inefficiency of error mitigation becomes more pronounced, requiring a significantly higher number of computational runs. This raises questions about the practicality of error mitigation as a viable solution for noise in quantum computation. The research serves as a critical guide for quantum scientists and engineers, urging them to explore alternative approaches that may offer more effective mitigation strategies.
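To get a feel for why the number of runs can grow so quickly, here is a back-of-the-envelope sketch. It is a heuristic and not the bound derived in the study: it assumes that each of the `n_qubits * depth` gate locations independently leaves the signal intact with probability `1 - error_rate`, and that resolving a signal of a given size to a target precision takes roughly one over the squared product of the two in runs. The function name `runs_needed` and all default values are hypothetical.

```python
import math

def runs_needed(n_qubits, depth, error_rate=0.01, precision=0.1):
    """Rough estimate of the runs required under a toy noise model.

    Assumption: the useful signal is damped by (1 - error_rate) for every
    gate location, and shot noise falls off as 1 / sqrt(runs).
    """
    surviving_signal = (1.0 - error_rate) ** (n_qubits * depth)
    return math.ceil(1.0 / (precision * surviving_signal) ** 2)

# Grow the circuit in both width and depth and watch the estimate explode.
for size in (10, 20, 40):
    print(f"{size} qubits x {size} layers: about {runs_needed(size, size):.3g} runs")
```

Under these assumptions the estimate grows exponentially with the product of width and depth, which matches the qualitative message above: larger circuits demand far more runs.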

Moving forward, the researchers plan to focus on developing solutions to overcome the challenges identified in current quantum error mitigation techniques. By combining randomized benchmarking and innovative error mitigation strategies, there is potential to address the scalability issues and inefficiencies associated with error mitigation. The study opens up new avenues for research in quantum error mitigation, encouraging further exploration of theoretical aspects of mitigating noise in quantum circuits.

The research conducted by the team of scientists provides valuable insights into the limitations of quantum error mitigation for large-scale quantum computers. The inefficiencies highlighted in the study emphasize the need for innovative approaches to address noise in quantum computation effectively. By reevaluating current mitigation schemes and exploring alternative strategies, researchers can work towards enhancing the performance and reliability of quantum computers in the future.
