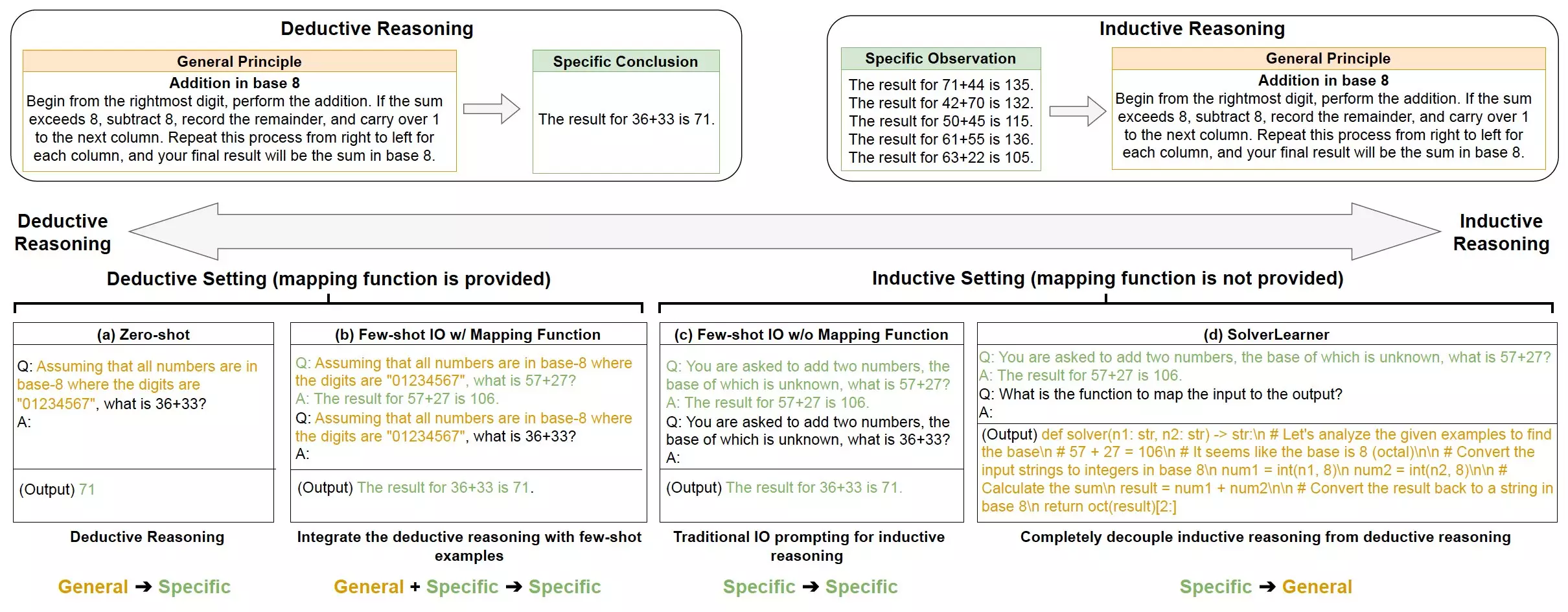

Reasoning is a fundamental process that allows humans to draw conclusions and solve problems based on the information available to them. Two main types of reasoning are commonly distinguished: deductive reasoning and inductive reasoning. Deductive reasoning starts from a general rule or premise and applies it to draw conclusions about specific cases. For example, if we know that “all dogs have ears” and “Chihuahuas are dogs,” we can conclude that “Chihuahuas have ears.”

Inductive reasoning, on the other hand, works in the opposite direction: it formulates general rules from specific observations. For instance, we might assume that all swans are white because all the swans we have seen so far were white. While human reasoning has been studied extensively, the extent to which artificial intelligence systems use deductive and inductive reasoning strategies remains relatively unexplored.

A recent study by a research team at Amazon and the University of California, Los Angeles (UCLA) examined the fundamental reasoning abilities of large language models (LLMs), AI systems that can process, generate, and adapt text in human languages. The study found that LLMs exhibit strong inductive reasoning capabilities but often struggle with deductive reasoning tasks, a finding that bears on how AI systems should be designed and used in practice.

To better understand the reasoning abilities of LLMs, the researchers introduced a new framework called SolverLearner. It uses a two-step approach that separates learning a rule from applying it to specific cases: the LLM learns the rule from examples, and an external tool, such as a code interpreter, applies it. This design reduces reliance on the LLM’s own deductive reasoning, so the results reflect its inductive ability. Using SolverLearner, the researchers had LLMs learn functions that map input data to outputs from specific examples.
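The two-step split described above can be sketched roughly as follows, assuming the learned rule is expressed as Python source code. This is not the researchers’ implementation: `build_prompt`, `propose_function` and `apply_function` are hypothetical names, the LLM call is replaced by a stub that returns a hard-coded answer, and the linear rule is an invented example. The point is the division of labor: the model only has to induce the rule, and the Python interpreter applies it to new cases.

```python
# Hypothetical two-step sketch: (1) an LLM induces a rule as Python code from
# input-output examples; (2) the Python interpreter, not the LLM, applies it.

def build_prompt(examples: list[tuple[int, int]]) -> str:
    """Step 1 input: ask the model for a function consistent with the examples."""
    lines = [f"f({x}) = {y}" for x, y in examples]
    return "Write a Python function f(x) consistent with:\n" + "\n".join(lines)

def propose_function(prompt: str) -> str:
    """Stub standing in for the LLM call; a real system would send `prompt`
    to a model. Here it returns a hard-coded answer as source text."""
    return "def f(x):\n    return 2 * x + 1\n"

def apply_function(source: str, inputs: list[int]) -> list[int]:
    """Step 2: execute the induced function with the interpreter so the
    LLM does not have to carry out the deduction itself."""
    namespace: dict = {}
    exec(source, namespace)  # run only trusted or sandboxed code this way
    f = namespace["f"]
    return [f(x) for x in inputs]

examples = [(1, 3), (2, 5), (4, 9)]
source = propose_function(build_prompt(examples))

# Sanity check: the induced rule must reproduce the training examples.
xs, ys = zip(*examples)
assert apply_function(source, list(xs)) == list(ys)

print(apply_function(source, [10, 0]))  # [21, 1]
```

In a real pipeline, `exec` on model-generated code should run in an isolated sandbox; it is used here only to keep the sketch short.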

The study revealed that LLMs’ inductive reasoning is stronger than their deductive reasoning, particularly in “counterfactual” scenarios, which deviate from the default conventions a model most likely saw during training. This highlights the importance of understanding when and how to use LLMs in applications such as agent systems and chatbots. Designing tasks to play to LLMs’ strong inductive capabilities may improve their performance on specific tasks.
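To see why counterfactual tasks are revealing, consider addition in base 9, an illustrative example chosen here rather than a claim about the study’s exact task set. A rule that simply recites the familiar base-10 convention fails on the examples, while the rule the examples actually imply succeeds. The helpers `to_base`, `add_in_base`, `decimal_rule`, `base9_rule` and `fits` are hypothetical.

```python
from collections.abc import Callable

# Illustrative counterfactual task (hypothetical): addition in base 9 rather
# than the familiar base 10. The examples, not the default convention, define
# the correct rule.

def to_base(n: int, base: int) -> str:
    """Render a non-negative integer as a digit string in `base` (2 to 10)."""
    if n == 0:
        return "0"
    digits = []
    while n > 0:
        n, r = divmod(n, base)
        digits.append(str(r))
    return "".join(reversed(digits))

def add_in_base(a: str, b: str, base: int) -> str:
    """Add two digit strings, both interpreted in `base`."""
    return to_base(int(a, base) + int(b, base), base)

def decimal_rule(a: str, b: str) -> str:
    """The familiar default: ordinary base-10 addition."""
    return str(int(a) + int(b))

def base9_rule(a: str, b: str) -> str:
    """The rule the counterfactual examples actually follow."""
    return add_in_base(a, b, 9)

def fits(candidate: Callable[[str, str], str],
         examples: list[tuple[tuple[str, str], str]]) -> bool:
    """True if the candidate rule reproduces every input-output example."""
    return all(candidate(a, b) == out for (a, b), out in examples)

# Base-9 examples: 15 + 7 = 23 (14 + 7 = 21 in decimal), 38 + 24 = 63 (35 + 22 = 57).
examples = [(("15", "7"), "23"), (("38", "24"), "63")]

print(fits(decimal_rule, examples), fits(base9_rule, examples))  # False True
print(base9_rule("8", "1"))  # 10
```

A system that has memorized base-10 arithmetic will reach for `decimal_rule`; only by inducing the rule from the examples does it arrive at `base9_rule`.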

As AI developers continue to explore the potential of LLMs in various domains, further research is needed to improve their reasoning processes. Future studies could examine how an LLM’s ability to compress information affects its inductive reasoning. This perspective may offer insights for improving the performance of AI systems.

Reasoning plays a crucial role in the development and use of artificial intelligence systems. Understanding the strengths and weaknesses of LLMs’ reasoning can guide developers in optimizing these systems for specific tasks. By leveraging LLMs’ inductive reasoning and addressing the limitations of their deductive reasoning, developers have considerable room to advance AI technology.
