Human emotions are an intricate web of feelings that significantly affect our behavior, thoughts, and interactions. Unlike physical phenomena, emotions are subjective experiences that are difficult to quantify. Every individual experiences and expresses emotions differently, which makes it hard for both humans and machines to decode these nuances systematically. Researchers in psychology and artificial intelligence (AI) are working to bridge this gap, using new technologies to better understand and quantify human emotional states.

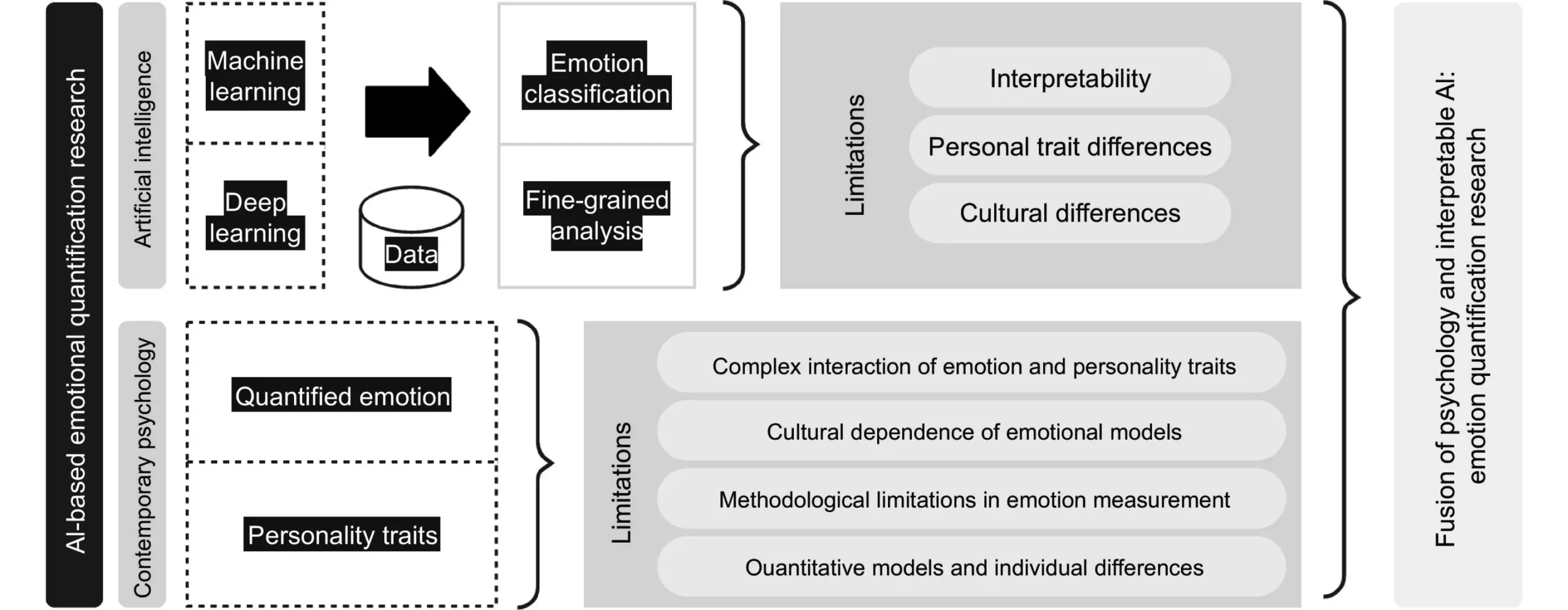

The fusion of AI with traditional psychological approaches is opening new possibilities in emotion recognition. Using machine learning and natural language processing, AI systems can detect patterns in human behavior that reveal emotional states. Rather than relying on basic observation alone, these systems can interpret subtle cues in both verbal and non-verbal communication. Gesture recognition, facial emotion recognition (FER), and physiological data from biometric sensors together offer a multidimensional view of emotion that is more complete than any single source provides.

Artificial intelligence can help decode emotions more effectively. For instance, AI can learn to recognize emotional cues in facial expressions or vocal intonation, information that traditional psychological methods often overlook. Researchers hope that advances in computer vision and deep learning will let machines analyze emotional states in real time, with potential applications in healthcare, education, and customer service.

Multimodal Emotion Recognition: A Holistic Approach

A significant innovation in this domain is multimodal emotion recognition. This approach combines data from multiple sources, such as visual, auditory, and even tactile signals, to build a comprehensive emotional profile of an individual. By integrating information from different sensory inputs, these systems can identify emotions more accurately, which is particularly valuable in therapeutic settings or when assessing mental health.

Consider an AI system that analyzes a person’s facial expressions, tone of voice, and physiological signals such as heart-rate variability. Combining these modalities lets the system capture more of the richness of human emotional experience than any single channel could, supporting more effective interactions and interventions. By moving away from a one-dimensional view of emotion, researchers aim to make emotion quantification more reliable and, in turn, enable more personalized experiences.
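One common way to combine modalities is late fusion: each modality's model produces its own probability distribution over emotion labels, and the system merges them with a weighted average. The Python sketch below illustrates that idea under stated assumptions. The label set, the modality names, the per-modality weights, and the input scores are all hypothetical placeholders, not outputs of any real model, and real systems may instead fuse features earlier or learn the weights from data.

```python
from typing import Dict, List

# Hypothetical label set; real systems use their own taxonomy.
EMOTIONS: List[str] = ["happy", "sad", "angry", "neutral"]


def late_fusion(
    modality_probs: Dict[str, List[float]],
    weights: Dict[str, float],
) -> Dict[str, float]:
    """Combine per-modality probability vectors with a weighted average.

    Only modalities present in `modality_probs` contribute, and their
    weights are renormalized, so a missing sensor can simply be omitted.
    """
    total_weight = sum(weights[m] for m in modality_probs)
    if total_weight <= 0:
        raise ValueError("weights of the supplied modalities must sum to a positive value")

    fused = [0.0] * len(EMOTIONS)
    for modality, probs in modality_probs.items():
        if len(probs) != len(EMOTIONS):
            raise ValueError(f"{modality}: expected {len(EMOTIONS)} scores, got {len(probs)}")
        w = weights[modality] / total_weight  # normalized weight for this modality
        for i, p in enumerate(probs):
            fused[i] += w * p
    return dict(zip(EMOTIONS, fused))


# Illustrative, made-up scores from three hypothetical per-modality models.
scores = {
    "face": [0.6, 0.1, 0.1, 0.2],
    "voice": [0.3, 0.2, 0.1, 0.4],
    "physio": [0.4, 0.1, 0.3, 0.2],
}
# Hypothetical trust placed in each modality.
weights = {"face": 0.5, "voice": 0.3, "physio": 0.2}

fused = late_fusion(scores, weights)
print(max(fused, key=fused.get))  # prints "happy"
```

With these made-up inputs, the facial channel's strong "happy" score dominates because it carries the largest weight. A weighted average keeps each modality's model independent, which makes it easy to drop an unavailable sensor, at the cost of ignoring interactions between modalities that feature-level fusion could capture.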

Meaningful progress in emotion quantification requires collaboration across disciplines. Integrating insights from psychology, psychiatry, and AI is essential to ensure that these technologies respect the complexity of human emotions while still offering practical solutions. As researchers in this field emphasize, interdisciplinary collaboration can provide a framework that accounts for cultural differences and individual nuances in emotional expression.

Collaboration also matters for the ethics of using AI in emotionally sensitive contexts. In mental health applications, it’s crucial that these systems uphold safety, transparency, and privacy. Standardized data-handling practices are vital for maintaining trust in AI technologies that aim to interpret human emotions accurately.

Challenges and Ethical Considerations

Despite these advances, emotion recognition technologies face significant challenges. Safety and transparency are critical, especially in sensitive fields like healthcare and psychology. Data privacy, along with how emotional data is processed and used, must be carefully managed. Accounting for cultural variation in emotional expression is also essential for keeping these systems accurate and relevant.

As we continue to explore AI for emotion quantification, we must proceed with caution and keep ethical considerations and human dignity at the forefront. Emotion recognition technology has considerable potential to improve how we understand and interact with one another, and to provide valuable insights for mental health support and personalized interactions.

As researchers refine their methods and build more sophisticated tools, emotion quantification is poised for significant progress. Integrating traditional psychological methods with AI may lead to a more nuanced understanding of the most human aspect of our existence: our emotions.
