Large language models (LLMs) have reshaped artificial intelligence and natural language processing. These models can process, generate, and manipulate text in many human languages, which makes them versatile tools across a wide range of applications.

Although LLMs can generate highly convincing text, they are not without flaws. One of the major issues researchers have identified is hallucination: cases where an LLM produces a response that is incoherent, inaccurate, or inappropriate.

Researchers at DeepMind recently proposed a new approach to tackling hallucinations in LLMs. Their procedure has the LLM evaluate its own candidate responses and identify cases where it would be better to abstain from answering a query altogether.
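The article does not spell out how the model scores its own responses. As one illustration of the general idea, the minimal sketch below assumes several responses to the same query have already been sampled from the model, scores the majority answer by how many samples agree with it, and abstains when that agreement falls below a threshold. The function name `answer_or_abstain`, the exact-string agreement test, and the threshold value are hypothetical choices made for this example; this is not DeepMind's procedure.

```python
from collections import Counter
from typing import Optional, Sequence


def answer_or_abstain(samples: Sequence[str], threshold: float) -> Optional[str]:
    """Return the majority sampled answer, or None to abstain.

    Hypothetical self-consistency rule (an assumption, not the paper's method):
    the confidence score is the fraction of samples that match the most
    common answer after simple normalization.
    """
    if not samples:
        return None  # nothing to judge, so abstain
    # Normalize so trivially different strings count as the same answer.
    normalized = [s.strip().lower() for s in samples]
    answer, count = Counter(normalized).most_common(1)[0]
    score = count / len(normalized)
    # Answer only when the samples agree strongly enough with each other.
    return answer if score >= threshold else None


if __name__ == "__main__":
    print(answer_or_abstain(["Paris", "paris", "Paris ", "Lyon"], threshold=0.7))
    print(answer_or_abstain(["Paris", "Lyon", "Nice"], threshold=0.7))
```

With `["Paris", "paris", "Paris ", "Lyon"]` and a threshold of 0.7, three of four samples agree (score 0.75), so the function returns `"paris"`; with `["Paris", "Lyon", "Nice"]` no answer gets more than one-third agreement, so it abstains and returns `None`. Exact string matching keeps the sketch self-contained; a system closer to the idea described above might instead ask the model itself whether two responses agree.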

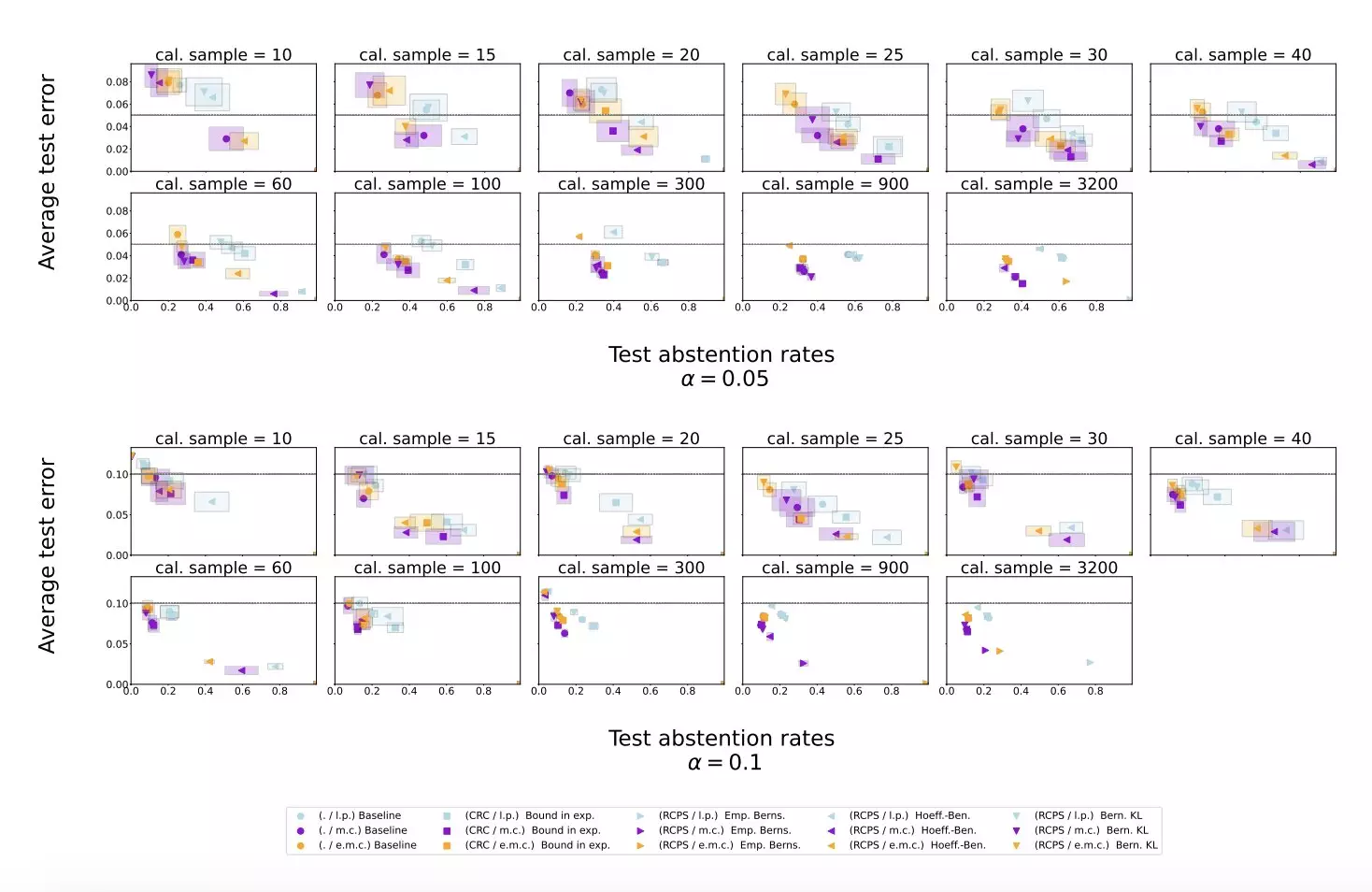

To evaluate the method, the researchers ran experiments on publicly available datasets, including Temporal Sequences and TriviaQA. Applying their approach to Gemini Pro, an LLM developed at Google, the team showed a significant reduction in hallucination rates while maintaining comparable performance across these different types of datasets.

The results suggest that the approach is effective at making LLMs more reliable. By letting the model abstain from answering when its response is likely to be nonsensical or untrustworthy, the method outperforms simple baseline scoring procedures.
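Any abstention rule trades hallucinations against unanswered questions, and the threshold decides where that trade lands. The minimal sketch below assumes a held-out set of questions, each with a confidence score (for example, a self-consistency score) and a label saying whether the model's answer was correct. It picks the lowest threshold whose hallucination rate among *answered* questions stays at or below a target, which keeps abstention as low as possible. The names `hallucination_and_abstention` and `pick_threshold`, the target `max_error`, and this empirical selection rule are illustrative assumptions; the article does not describe how DeepMind calibrates its method, and this simple rule gives no statistical guarantee on new data.

```python
from typing import List, Optional, Tuple


def hallucination_and_abstention(
    scores: List[float], correct: List[bool], threshold: float
) -> Tuple[float, float]:
    """Return (hallucination_rate, abstention_rate) at a given threshold.

    Hallucination rate here means the fraction of answered questions
    (score >= threshold) whose answer was wrong (an assumed definition).
    """
    answered = [c for s, c in zip(scores, correct) if s >= threshold]
    abstention = 1 - len(answered) / len(scores)
    if not answered:
        return 0.0, abstention  # abstaining on everything makes no errors
    errors = sum(1 for c in answered if not c)
    return errors / len(answered), abstention


def pick_threshold(
    scores: List[float], correct: List[bool], max_error: float
) -> Optional[float]:
    """Lowest observed score whose empirical hallucination rate <= max_error.

    Scanning thresholds from low to high means the first one that meets the
    target answers the most questions. Returns None if no threshold works,
    i.e. the model should abstain on everything.
    """
    for t in sorted(set(scores)):
        err, _ = hallucination_and_abstention(scores, correct, t)
        if err <= max_error:
            return t
    return None


if __name__ == "__main__":
    # Hypothetical calibration data, made up for illustration.
    scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4]
    correct = [True, True, True, False, True, False]
    t = pick_threshold(scores, correct, max_error=0.2)
    print(t, hallucination_and_abstention(scores, correct, t))
```

On this made-up data, a threshold of 0.5 answers five of six questions with one error, a 20% hallucination rate at roughly 17% abstention; tightening the target to zero errors pushes the threshold up to 0.7 and the model abstains on half the questions.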

The DeepMind study could pave the way for similar procedures that improve LLM performance and keep models from hallucinating. Such efforts are important for advancing natural language processing and for making LLMs reliable enough to be adopted widely by professionals around the world.
