In recent years, unmanned aerial vehicles (UAVs), colloquially known as drones, have emerged as transformative tools for surveying and analyzing various environments. These flying robots are increasingly capable of collecting large volumes of data, which can be used to assemble intricate three-dimensional (3D) visual models of real-world spaces. Mapping an environment effectively and efficiently, however, is no trivial task. A notable advance in this field comes from a collaboration between researchers at Sun Yat-Sen University and the Hong Kong University of Science and Technology, who have introduced a framework called SOAR. The system is designed to optimize the autonomous exploration and reconstruction of physical spaces, with potential applications ranging from urban planning to immersive video game design.

The emergence of SOAR underscores a growing demand for better 3D reconstruction methods using UAV technology. According to Mingjie Zhang, one of the co-authors of the research, SOAR was motivated by the limitations of existing reconstruction techniques, which fall broadly into model-based and model-free approaches. Model-based methods are often time-consuming and resource-intensive, while model-free methods, which explore and reconstruct simultaneously, tend to be constrained by local planning. The goal of the SOAR project is to combine the strengths of both approaches in a single cohesive system.

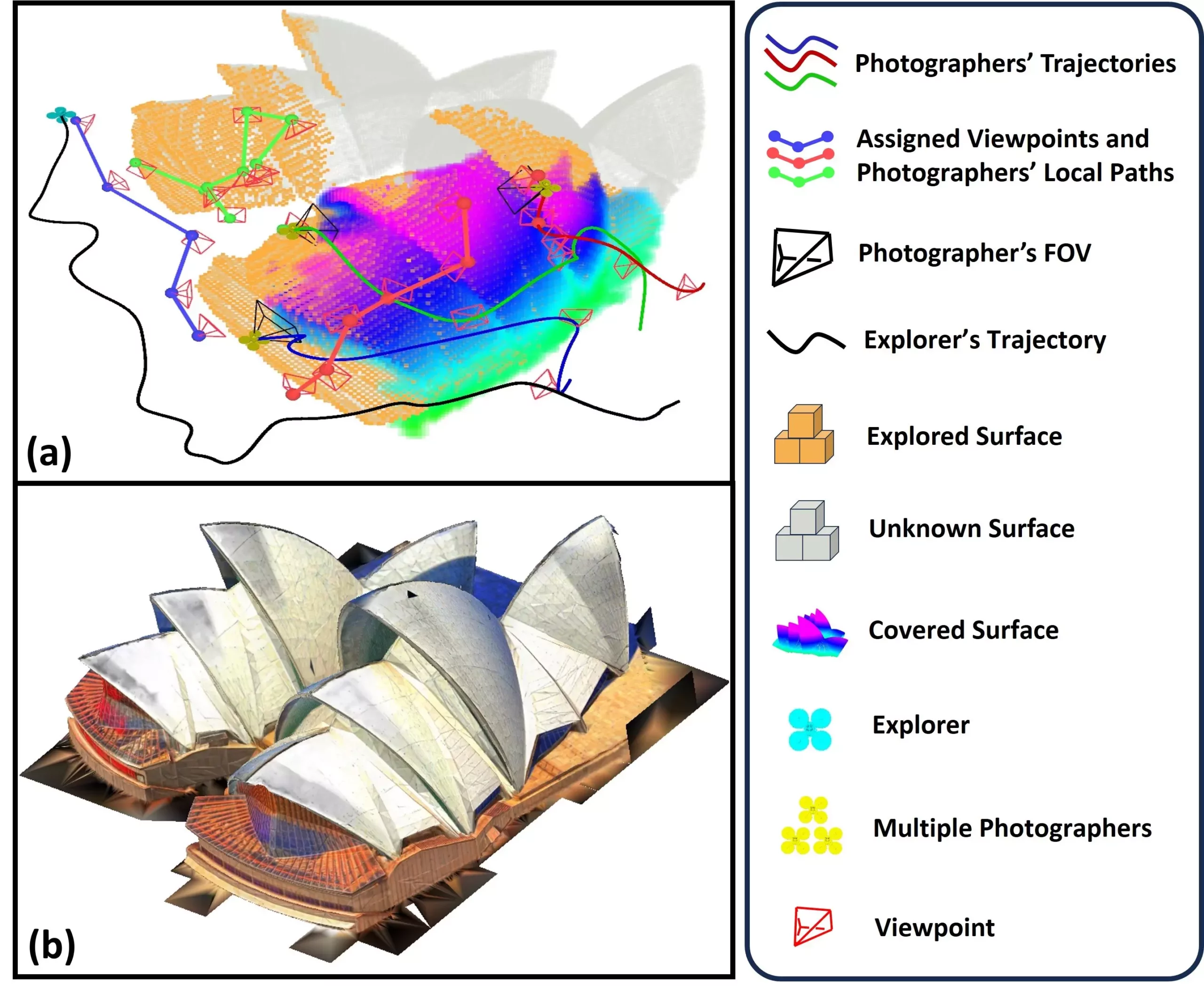

At its core, SOAR is a heterogeneous multi-UAV framework in which a group of UAVs cooperates to explore an environment and capture visual data of it. Its design is notable for an incremental viewpoint generation technique that adapts dynamically, adjusting in real time as new scene information is acquired. This flexibility is complemented by a task assignment strategy that optimizes the role of each UAV in collecting the data needed to construct accurate 3D models.
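To make the idea of incremental viewpoint generation more concrete, here is a minimal sketch. It is not SOAR's actual algorithm, which this article does not detail. It assumes a 2D world, surface points that come with a unit normal, and two hypothetical parameters (`standoff` and `cover_radius`). A new viewpoint is added only when a newly mapped surface point is not already close to an existing viewpoint.

```python
import math

def update_viewpoints(viewpoints, new_surface_points, standoff=2.0, cover_radius=3.0):
    """Add viewpoints for surface points that no existing viewpoint covers.

    viewpoints: list of (x, y) tuples, modified in place.
    new_surface_points: iterable of (x, y, nx, ny), where (nx, ny) is the
        surface normal. Both parameters are illustrative assumptions.
    Returns the list of viewpoints added by this call.
    """
    added = []
    for x, y, nx, ny in new_surface_points:
        # Skip points already within range of some existing viewpoint.
        if any(math.dist((x, y), vp) <= cover_radius for vp in viewpoints):
            continue
        # Place the new viewpoint at a fixed distance along the normal.
        vp = (x + standoff * nx, y + standoff * ny)
        viewpoints.append(vp)
        added.append(vp)
    return added

# Two batches of surface points arrive as the map grows.
viewpoints = []
update_viewpoints(viewpoints, [(0, 0, 0, 1), (1, 0, 0, 1)])
update_viewpoints(viewpoints, [(10, 0, 0, 1)])
print(viewpoints)
```

The second point in the first batch is skipped because the viewpoint created for the first point already lies within `cover_radius` of it. A real system would also have to check visibility and camera field of view, which this sketch ignores.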

When deployed, SOAR follows a systematic workflow in which multiple UAVs work in concert. An “explorer” UAV equipped with LiDAR initiates the mapping process using a surface frontier-based strategy. Its role is to navigate the environment methodically while gathering spatial data. As the explorer maps the terrain, SOAR incrementally generates viewpoints that together provide comprehensive coverage for subsequent data collection. The result is a division of labor: the other UAVs, equipped with cameras, are tasked with visiting the designated viewpoints to capture high-quality imagery.
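The general idea behind frontier-based exploration can be illustrated with a short sketch. Note that this shows the classic formulation (a frontier is a known-free cell bordering unknown space) on a small 2D occupancy grid, not SOAR's surface-frontier variant, whose details are not given here. The cell encoding and the function name are assumptions made for the example.

```python
# Hypothetical cell encoding for a 2D occupancy grid.
FREE, OCCUPIED, UNKNOWN = 0, 1, -1

def find_frontiers(grid):
    """Return (row, col) of every free cell with a 4-connected unknown neighbor."""
    rows, cols = len(grid), len(grid[0])
    frontiers = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                inside = 0 <= nr < rows and 0 <= nc < cols
                if inside and grid[nr][nc] == UNKNOWN:
                    frontiers.append((r, c))
                    break  # one unknown neighbor is enough
    return frontiers

grid = [
    [FREE, FREE,     UNKNOWN],
    [FREE, OCCUPIED, UNKNOWN],
    [FREE, FREE,     FREE],
]
print(find_frontiers(grid))  # [(0, 1), (2, 2)]
```

An explorer would repeatedly pick one of these frontier cells as its next goal, scan from there, update the grid, and recompute the frontiers until none remain.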

The process relies on a task assignment method known as Consistent-MDMTSP (MDMTSP stands for Multi-Depot Multiple Traveling Salesman Problem), designed to assign viewpoints effectively to the “photographer” UAVs. The method aims for an even distribution of workload while keeping assignments consistent from one planning round to the next, which matters for obtaining high-fidelity data for reconstruction. By combining LiDAR and visual sensing, SOAR is able to explore diverse environments quickly and accurately.
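The workload-balancing goal can be sketched with a simple greedy heuristic. This is not Consistent-MDMTSP: it does not solve the underlying routing problem optimally and makes no attempt at consistency between replanning rounds. It only illustrates, under assumed 2D coordinates and a hypothetical `assign_viewpoints` helper, how viewpoints might be shared out so that no single UAV's route grows much longer than the others.

```python
import math

def assign_viewpoints(starts, viewpoints):
    """Greedily assign viewpoints to UAVs, keeping route lengths balanced.

    starts: list of (x, y) start positions, one per photographer UAV.
    viewpoints: list of (x, y) positions to visit.
    Returns (routes, lengths): per-UAV visiting order and route length.
    """
    routes = [[] for _ in starts]
    positions = list(starts)
    lengths = [0.0] * len(starts)
    remaining = list(viewpoints)
    while remaining:
        # Pick the (UAV, viewpoint) pair whose resulting route is shortest.
        cost, u, v = min(
            (lengths[u] + math.dist(positions[u], v), u, v)
            for u in range(len(starts))
            for v in remaining
        )
        routes[u].append(v)
        positions[u] = v
        lengths[u] = cost
        remaining.remove(v)
    return routes, lengths

routes, lengths = assign_viewpoints(
    starts=[(0, 0), (10, 0)],
    viewpoints=[(1, 0), (2, 0), (9, 0), (8, 0)],
)
print(routes)   # [[(1, 0), (2, 0)], [(9, 0), (8, 0)]]
print(lengths)  # [2.0, 2.0]
```

Because each step extends whichever route would end up shortest, a UAV that already has a long route tends to be passed over in favor of one with spare capacity.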

Initial simulations conducted by Zhang and his colleagues indicate that SOAR outperforms several existing state-of-the-art reconstruction methods. These promising results hint at SOAR’s potential utility across numerous domains. For instance, urban planners could use the technology to create detailed 3D representations of city environments, supporting more detailed design and assessment of infrastructure. SOAR also holds promise for historical preservation, where it could help reconstruct and document cultural landmarks.

Moreover, SOAR’s adaptability could prove invaluable in post-disaster assessments by enabling rapid evaluations of damage, essential for formulating timely recovery strategies. Beyond these critical applications, the entertainment industry may find novel uses for SOAR in crafting realistic virtual worlds, drawing inspiration from real urban layouts or natural landscapes.

As the research team envisions the future development of SOAR, they express enthusiasm about overcoming existing challenges that could impede its application in real-world scenarios. Plans are underway to bridge the so-called “Sim-to-Real Gap,” focusing on addressing issues like localization errors and disruptions in communication that often occur when deploying UAV systems in uncontrolled environments.

Further studies will explore new task allocation strategies to improve coordination among UAVs and speed up map generation. The team also plans to integrate scene prediction algorithms and active feedback mechanisms that adapt planning dynamically based on real-time data, with the aim of keeping SOAR at the forefront of autonomous UAV technology.

The SOAR framework represents a significant leap forward in the realm of UAV-based 3D reconstruction. Its innovative methodologies promise to revolutionize various domains, making it a pivotal development for both researchers and practitioners looking to harness the full capabilities of drone technology in mapping and monitoring environments.
