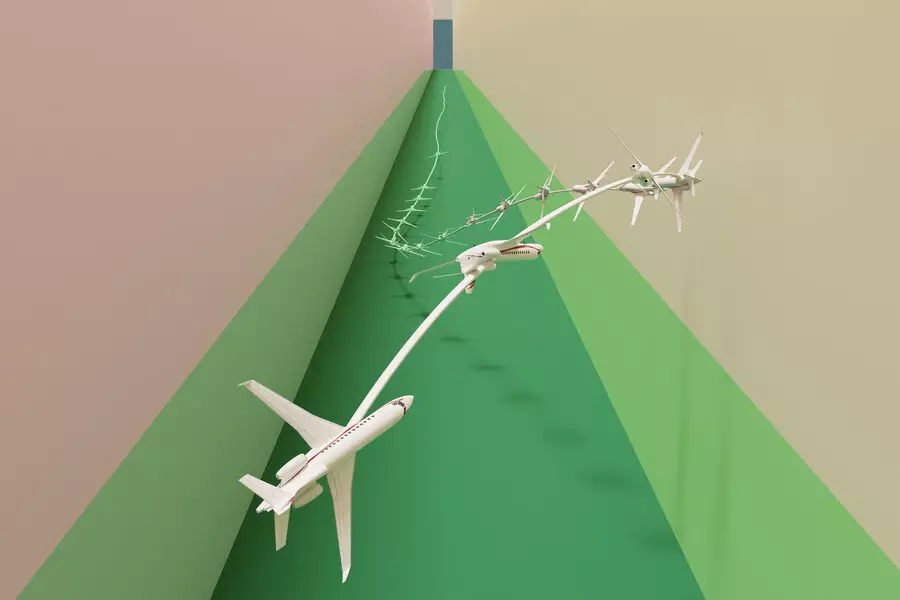

In the movie “Top Gun: Maverick,” Maverick, played by Tom Cruise, trains young pilots to fly their jets deep into a rocky canyon and avoid detection by radar. An autonomous aircraft would struggle with this task because the most direct path to the target conflicts with the need to avoid the canyon walls. To address this conflict, MIT researchers have developed a technique that handles complex stabilize-avoid problems better than existing methods.

Method

Existing methods for stabilize-avoid problems often simplify the system so that it can be solved with straightforward math, but the simplified results do not hold up under real-world dynamics. More effective techniques use reinforcement learning, but finding the right balance between the two goals of remaining stable and avoiding obstacles can be tedious.

The MIT researchers broke the problem into two steps. First, they reframed the stabilize-avoid problem as a constrained optimization problem, in which the constraints ensure that the agent avoids obstacles. Second, they reformulated the constrained optimization problem into a mathematical representation called the epigraph form and solved it using a deep reinforcement learning algorithm. The epigraph form allowed them to bypass the difficulties other methods face when using reinforcement learning.
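To make the epigraph idea concrete, here is a minimal sketch on a toy problem. It is not the researchers' algorithm: it assumes a one-dimensional decision variable, a hypothetical cost `(x - 3)^2` standing in for the stabilization goal, and a hypothetical constraint `x - 1 <= 0` standing in for obstacle avoidance. It also replaces the deep reinforcement learning step with a brute-force search over candidate values. The textbook epigraph form of "minimize cost(x) subject to constraint(x) <= 0" introduces an auxiliary bound `z` and asks for the smallest `z` such that some `x` satisfies both `cost(x) <= z` and `constraint(x) <= 0`.

```python
# Toy illustration of the epigraph form (all functions here are hypothetical,
# chosen only for illustration; they are not taken from the research).

def cost(x):
    # Stand-in for the "stabilize" objective: smaller is better.
    return (x - 3.0) ** 2

def constraint(x):
    # Stand-in for the "avoid" requirement: safe when constraint(x) <= 0.
    return x - 1.0

def inner_value(z, candidates):
    # Inner problem: for a fixed bound z, find the candidate that best
    # satisfies both cost(x) <= z and constraint(x) <= 0 at the same time.
    # The result is <= 0 exactly when some candidate satisfies both.
    return min(max(constraint(x), cost(x) - z) for x in candidates)

def solve_epigraph(candidates, z_lo=0.0, z_hi=100.0, tol=1e-6):
    # Outer problem: bisect on z to find the smallest bound that the
    # inner problem can still satisfy. Assumes z_hi is feasible.
    while z_hi - z_lo > tol:
        z_mid = 0.5 * (z_lo + z_hi)
        if inner_value(z_mid, candidates) <= 0.0:
            z_hi = z_mid  # feasible: try a tighter bound
        else:
            z_lo = z_mid  # infeasible: relax the bound
    return z_hi

# Brute-force candidate set from -5.00 to 5.00 in steps of 0.01.
candidates = [i / 100.0 for i in range(-500, 501)]
z_star = solve_epigraph(candidates)
x_star = min(candidates, key=lambda x: max(constraint(x), cost(x) - z_star))
print(f"best bound z = {z_star:.4f}, attained at x = {x_star:.2f}")
```

In this sketch the unconstrained minimizer `x = 3` violates the constraint, so the search settles on the boundary `x = 1`, where the cost is 4. The point of the two-level structure is that the inner problem has no explicit constraint left: safety and cost are folded into a single quantity to minimize, and no weight between the two goals has to be tuned by hand.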

Testing

To test their approach, the researchers designed a number of control experiments with different initial conditions. In some simulations, for instance, the autonomous agent needs to reach and stay inside a goal region while making drastic maneuvers to avoid obstacles that are on a collision course with it. When compared with several baselines, their approach was the only one that could stabilize all trajectories while maintaining safety. In the jet scenario, the researchers’ controller prevented the aircraft from crashing or stalling while stabilizing to the goal far better than any of the baselines.

In the future, this technique could be a starting point for designing controllers for highly dynamic robots that must meet safety and stability requirements, like autonomous delivery drones. It could also be implemented as part of a larger system, for example to help a driver safely navigate back to a stable trajectory on a snowy road. The researchers believe the field should strive to give reinforcement learning the safety and stability guarantees needed to provide assurance when controllers are deployed on mission-critical systems.

MIT researchers have developed a new technique that can solve complex stabilize-avoid problems better than existing methods. The researchers reframed the stabilize-avoid problem as a constrained optimization problem and reformulated it into a mathematical representation called the epigraph form. The researchers tested their approach by designing a number of control experiments with different initial conditions. In the future, this technique could be a starting point for designing controllers for highly dynamic robots that must meet safety and stability requirements. The researchers believe the goal should be to give reinforcement learning the safety and stability guarantees needed to provide assurance when controllers are deployed on mission-critical systems.
