A recent study has shed light on how people prefer redistributive decisions to be made. Researchers from the University of Portsmouth and the Max Planck Institute for Innovation and Competition examined public attitudes towards AI versus human decision-makers. The findings, published in the journal Public Choice, show that a majority of participants preferred artificial intelligence over humans as the decision-maker.

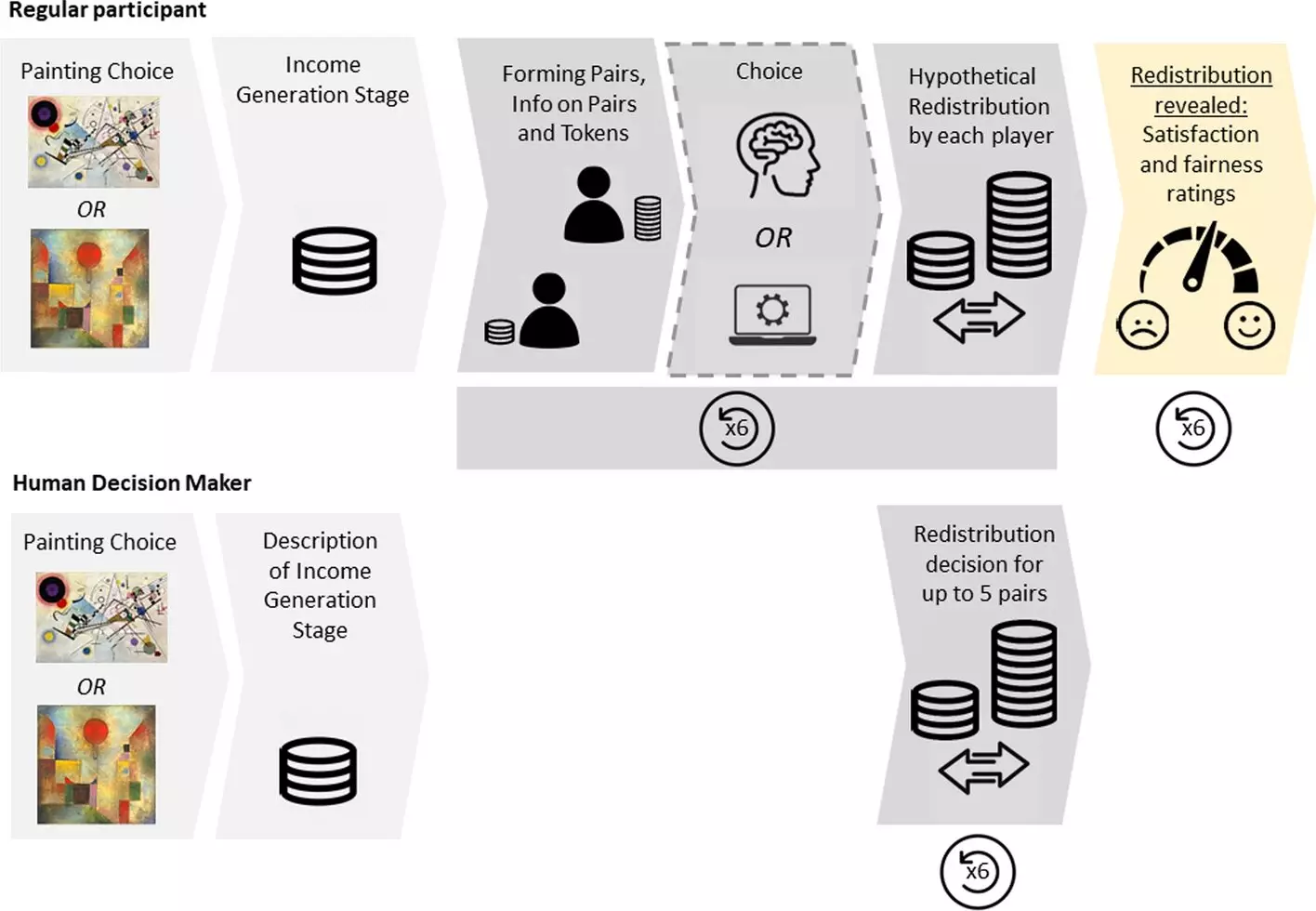

In an online experiment with more than 200 participants from the U.K. and Germany, participants were asked to choose whether a human or an algorithm (AI) should decide how earnings were redistributed between two people. Over 60% of participants opted for AI over a human, regardless of the potential for discrimination. This preference challenges the conventional belief that human decision-makers are better suited to decisions with a moral component such as fairness.

The Dilemma of Satisfaction and Fairness

Despite this preference for algorithms, participants were less satisfied with the decisions made by AI than with those made by humans. Their subjective ratings of the decisions were shaped by their own material interests and fairness ideals. Participants tolerated deviations from their ideals to a certain extent, but reacted strongly and negatively to decisions that did not align with established fairness principles.

Dr. Wolfgang Luhan, the study’s corresponding author and Associate Professor of Behavioral Economics at the University of Portsmouth, emphasized the importance of transparency and accountability in algorithmic decision-making. People are increasingly open to AI decision-makers because of their potential for unbiased decisions, but acceptance depends on how the algorithms actually perform and on whether their decisions can be explained. This matters most in moral decision-making contexts, where transparency and accountability are vital to public trust.

The study’s findings hint at a potential shift towards greater acceptance of algorithmic decision-makers in various sectors, including hiring, compensation, policing, and parole strategies. Many companies and public bodies already use AI in their decision-making, and with improvements in algorithm consistency, public support for AI in morally significant areas could increase. The study highlights the need for continued research and development to ensure that AI-driven decisions are both effective and ethical.

Overall, the research offers insight into changing perceptions of decision-makers and the growing preference for artificial intelligence in redistributive decisions. As technology becomes more integrated into society, understanding what drives public acceptance of AI, and ensuring transparency and accountability, will be critical to realizing the potential of algorithmic decision-making.
