Cognitive flexibility is a key aspect of human intelligence: it lets us adapt to new situations, learn new skills, and switch between tasks. Artificial intelligence has advanced significantly in recent years, but it still falls short of human cognitive flexibility. Understanding how biological neural circuits support this flexibility can offer insights for building more flexible AI systems. Recent research has approached the problem by studying neural computation in artificial neural networks trained to handle multiple tasks.

In 2019, a research group spanning New York University, Columbia University, and Stanford University trained a single neural network to perform 20 related tasks. In a more recent study published in Nature Neuroscience, researchers at Stanford examined the mechanisms that allow such networks to perform modular computations. They identified computational substrates known as “dynamical motifs” that help explain how cognitive flexibility arises in artificial neural networks.

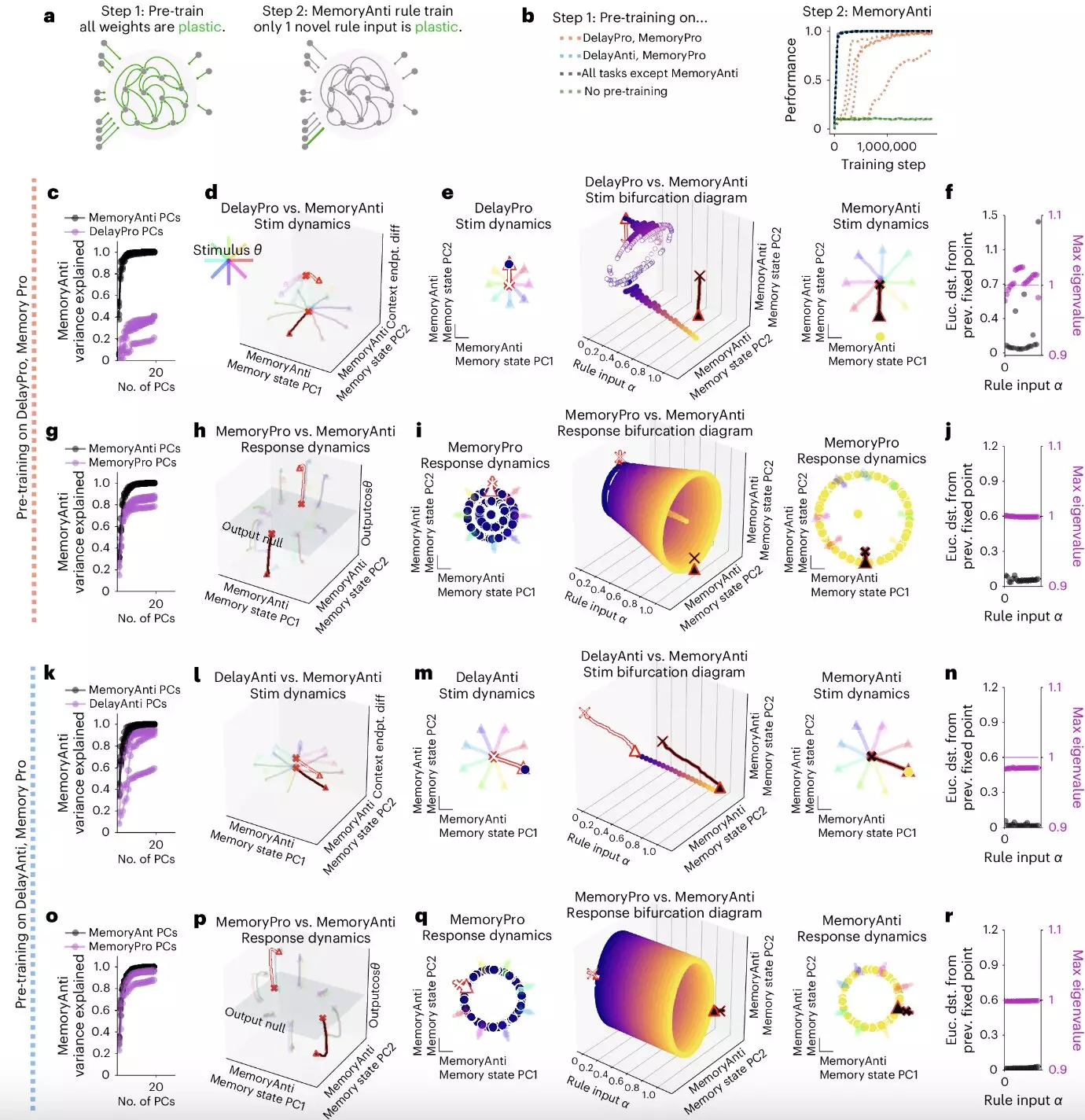

The study by Driscoll, Shenoy, and Sussillo found that dynamical motifs are recurring patterns of neural activity that implement specific computations through network dynamics. These motifs, such as attractors, decision boundaries, and rotations, were reused across tasks, indicating that the network had learned a modular subtask structure. Notably, tasks requiring memory of a continuous circular variable repurposed the same ring attractor, showing how a single motif can serve several different tasks.
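To make the terms "attractor" and "decision boundary" concrete, here is a minimal sketch of a single recurrent unit with a tanh nonlinearity. It is a toy illustration, not the network or the analysis from the study: the function names `step` and `settle` and the self-connection weight of 2.0 are assumptions chosen so that the unit has two stable states.

```python
import math

def step(x, w=2.0, u=0.0):
    """One update of a single recurrent unit: x <- tanh(w * x + u).

    w is a hypothetical self-connection weight and u an external input;
    neither value comes from the study.
    """
    return math.tanh(w * x + u)

def settle(x0, n_steps=200, w=2.0):
    """Iterate the dynamics with no input and return the final state."""
    x = x0
    for _ in range(n_steps):
        x = step(x, w)
    return x

# Starting points on either side of zero settle into different stable states.
pos = settle(0.1)    # converges to the positive attractor
neg = settle(-0.1)   # converges to the negative attractor

# Zero is also a fixed point, but an unstable one: it separates the two
# basins of attraction and so acts as a decision boundary.
boundary = settle(0.0)

print(pos, neg, boundary)
```

With a self-connection weight above 1, any small positive or negative starting value is amplified until the unit saturates, so the final state stores the sign of the initial condition. A ring attractor extends the same idea from two discrete states to a continuous circle of stable states, which is what allows it to hold a circular variable in memory.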

The researchers’ analyses revealed that, in the recurrent neural networks they studied, dynamical motifs were implemented by clusters of units when the unit activation function was restricted to be positive. Lesioning these clusters produced modular performance deficits, impairing the computations that depended on the affected motif and underscoring the role of motifs in task flexibility. The researchers also observed that, after an initial phase of learning, existing motifs were reconfigured for fast transfer learning rather than rebuilt from scratch.
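The logic of a cluster lesion can be sketched with a hand-built toy network. This is an illustration under strong simplifying assumptions, not the trained network or the lesion procedure from the study: the four-unit layout, the weights, the context biases, and the names `run_trial`, `CLUSTER_A`, and `CLUSTER_B` are all hypothetical. Each cluster here serves a single task to keep the example short, whereas the motifs described in the article were shared across tasks.

```python
def relu(v):
    """Positive (rectified) activation function."""
    return max(0.0, v)

# Hypothetical hand-set weights: units 0-1 form cluster A, units 2-3 cluster B.
W_IN = [1.0, -1.0, -1.0, 1.0]        # stimulus weight onto each unit
READOUT = [1.0, -1.0, 1.0, -1.0]     # output weight from each unit
CONTEXT_BIAS = {
    "A": [0.0, 0.0, -10.0, -10.0],   # task A context silences cluster B
    "B": [-10.0, -10.0, 0.0, 0.0],   # task B context silences cluster A
}
CLUSTER_A = (0, 1)
CLUSTER_B = (2, 3)

def run_trial(task, stimulus, lesioned=(), delay=5):
    """Show a stimulus for one step, hold it over a delay, then read out.

    Task A reports the remembered stimulus; task B reports its negative.
    Units listed in `lesioned` are clamped to zero at every step.
    """
    h = [0.0] * 4
    for t in range(1 + delay):
        s = stimulus if t == 0 else 0.0
        h = [
            0.0 if i in lesioned
            else relu(h[i] + W_IN[i] * s + CONTEXT_BIAS[task][i])
            for i in range(4)
        ]
    return sum(r * x for r, x in zip(READOUT, h))

intact_a = run_trial("A", 0.5)
intact_b = run_trial("B", 0.5)
lesion_a = run_trial("A", 0.5, lesioned=CLUSTER_A)
lesion_b = run_trial("B", 0.5, lesioned=CLUSTER_A)

print(intact_a, intact_b, lesion_a, lesion_b)
```

Clamping cluster A to zero removes the memory that task A relies on while leaving task B's output unchanged. That selective failure is what a modular performance deficit means in this toy setting.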

The findings of this study establish dynamical motifs as a fundamental unit of compositional computation, intermediate between the individual neuron and the network as a whole. This framework sheds light on the mechanisms underlying cognitive flexibility and has implications for both neuroscience and computer science research. Understanding how neural networks reconfigure themselves according to task context may help researchers build more flexible and adaptable artificial intelligence systems.

By showing how dynamical motifs enable modular computation and rapid task switching, the work advances our understanding of the mechanisms behind flexible behavior. Building these principles into artificial neural networks could lead to more versatile AI systems capable of handling a diverse range of tasks. As research in this field progresses, it may yield new strategies for emulating the cognitive flexibility seen in biological neural circuits.
