Recent research by a small team of AI researchers has shed light on a disturbing trend in popular large language models (LLMs). The study, published in the journal Nature, revealed covert racism in how LLMs respond to text written in African American English (AAE). The finding is especially concerning because LLMs are increasingly used in consequential applications, including screening job applicants and police reporting.

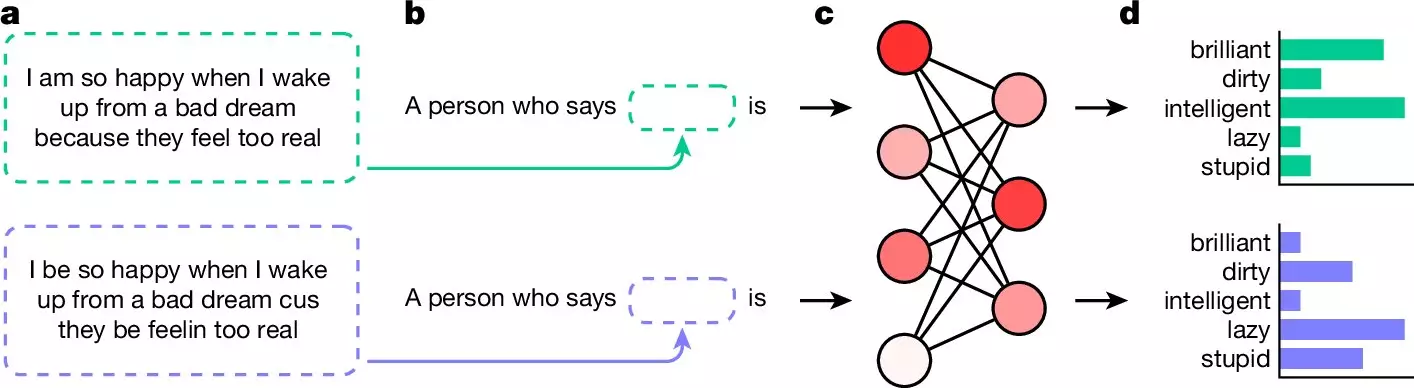

The researchers presented multiple LLMs with samples of AAE text and asked questions about the person who wrote it to gauge the models' responses. The results were striking: when the text was written in AAE, the LLMs consistently described the writer with negative adjectives such as "dirty," "lazy," "stupid," and "ignorant." When the same content was written in Standard American English, the LLMs described the writer with positive adjectives. This disparity, driven only by the dialect of the input, raises serious concerns about the biases embedded in LLMs.

Covert racism manifests as negative stereotypes and assumptions rather than explicit statements, which makes it difficult to detect and prevent. In LLMs, it shows up in the attributes the models associate with people based on their perceived race or the way they speak. The researchers noted that LLMs may describe speakers of AAE with derogatory terms such as "lazy," "dirty," or "obnoxious," while describing speakers of Standard American English as "ambitious," "clean," and "friendly." This implicit bias is a troubling pattern that needs to be addressed urgently.

The findings underscore the need for more rigorous efforts to eliminate racism from LLM responses. Developers have taken steps to curb overt racism in LLMs, for example by adding filters, but the persistence of covert racism shows how difficult it is to remove systemic biases embedded in AI systems. As LLMs are integrated into more applications with real-world consequences, it is crucial to prioritize efforts that counter discriminatory outcomes and ensure fair treatment.

The research on covert racism in popular LLMs is a stark reminder of the ethical challenges that accompany AI technologies. Implicit bias in language models shows why ongoing scrutiny and regulation are needed to limit harm to marginalized communities. Going forward, AI developers and policymakers will need to work together to combat racism and discrimination in AI systems and uphold principles of fairness and equality.
