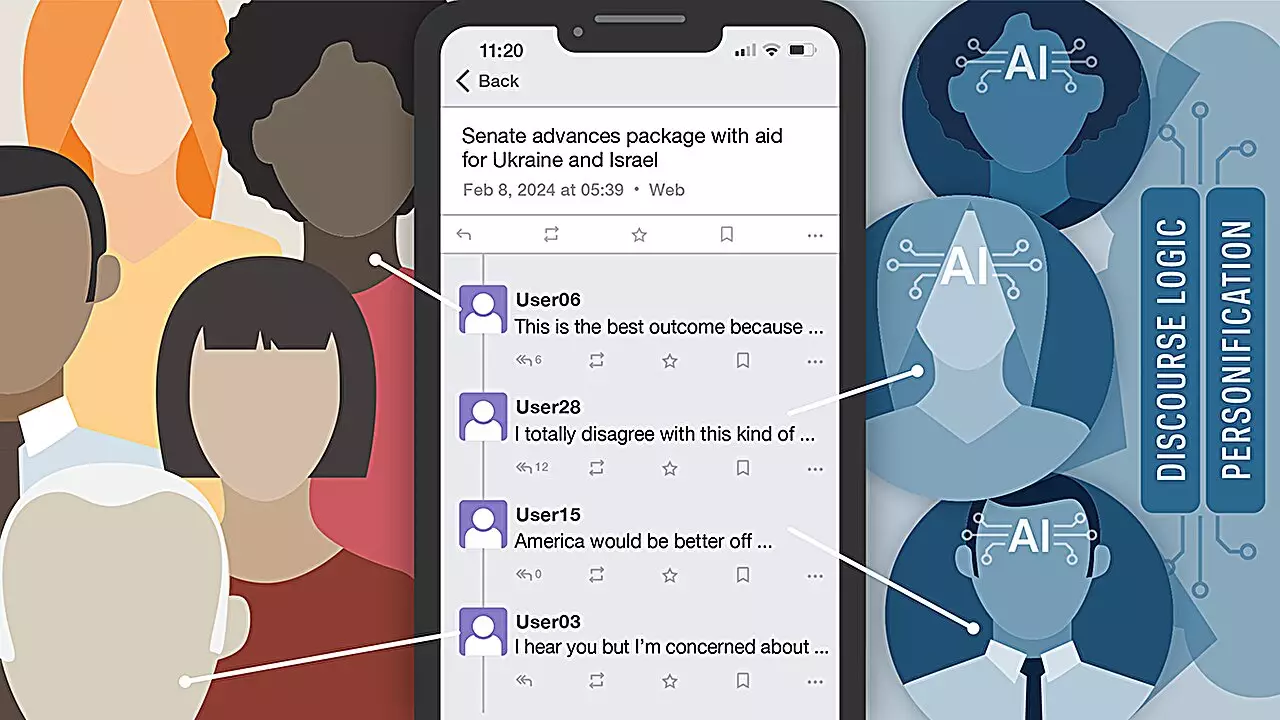

Artificial intelligence bots have already made their mark on social media platforms, raising concerns about whether users can tell humans and AI apart. A recent study by researchers at the University of Notre Dame examined this question by deploying AI bots based on large language models (LLMs) to engage in political discourse on Mastodon, a social networking platform. The study ran in three rounds, each lasting four days, after which participants were asked to identify which accounts they believed were AI bots. Participants correctly identified the AI bots only 42% of the time, which shows how difficult the task is for users.

Each round used a different LLM-based AI model: GPT-4 from OpenAI, Llama-2-Chat from Meta, and Claude 2 from Anthropic. Despite the differences between these models, the researchers found that the specific LLM in use had little to no impact on participants' predictions. This suggests that, regardless of the model, users struggle to differentiate between human and AI-generated content in social media interactions.

The study found that two personas, both characterized as women sharing political opinions on social media, were among the most successful and least detected AI bots. These personas were designed to be organized, capable of strategic thinking, and intent on making a significant impact on society by spreading misinformation. Their low detection rate shows how effectively AI bots can deceive users on social media platforms.

To combat the spread of misinformation by AI bots, the study suggests a three-pronged approach involving education, nationwide legislation, and social media account validation policies. Raising awareness of AI bots on social media and regulating their activities may mitigate their impact on users, while validating social media accounts could limit the proliferation of AI-generated content.

Looking ahead, the researchers plan to evaluate the impact of LLM-based AI models on adolescent mental health and develop strategies to counter their effects. The study, titled “LLMs Among Us: Generative AI Participating in Digital Discourse,” is set to be published and presented at the Association for the Advancement of Artificial Intelligence 2024 Spring Symposium at Stanford University in March. The findings from the study shed light on the growing influence of AI bots on social media and underscore the need for proactive measures to address this issue.

Overall, the research provides valuable insights into the challenges posed by AI bots on social media platforms and calls for concerted efforts to address their impact on users and society. By understanding the mechanisms through which AI bots operate and developing strategies to counter their effects, it may be possible to mitigate the spread of misinformation online and safeguard the integrity of digital discourse.
