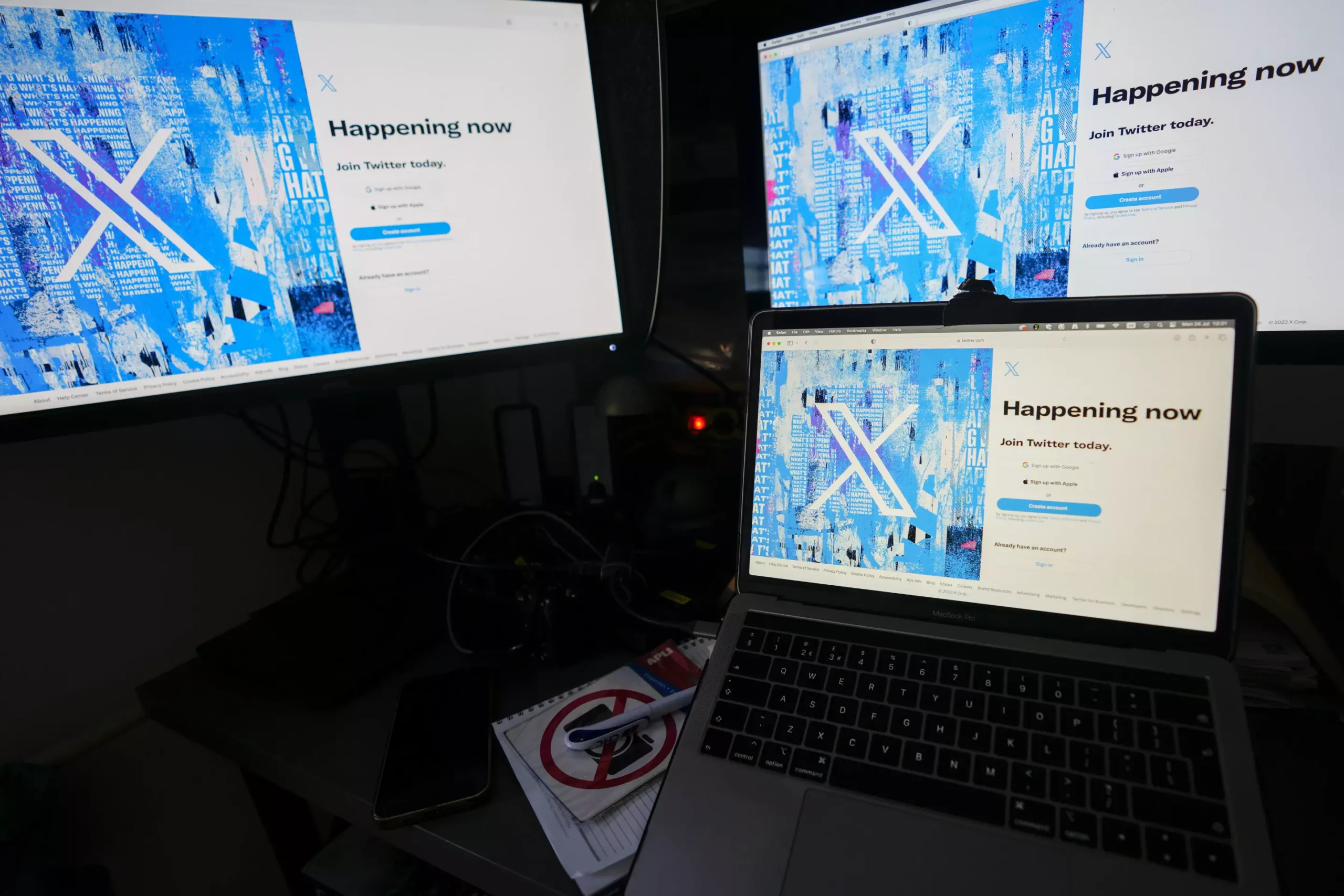

X, the social media platform formerly known as Twitter, has changed its approach to transparency in content moderation since Elon Musk acquired it. In a newly released transparency report, the platform documented a sharp escalation in its content moderation efforts during the first half of the year. The report is the company’s first comprehensive disclosure since the acquisition. It sheds light on X’s enforcement strategies and on how much has changed under Musk’s leadership.

The report shows a dramatic increase in account suspensions. Nearly 5.3 million accounts were suspended in the first half of the year, compared with 1.6 million in the same period of 2022, which is more than a threefold rise. The jump points to a more aggressive moderation stance. X presents this stance as upholding its community standards, but it also raises questions about the implications for free speech on the platform. The surge in suspensions may also reflect how hard it is for X to manage a space rife with misinformation and polarizing content.

X also removed or labeled 10.6 million posts for violating its policies, 5 million of them under its “hateful conduct” policy. Other categories, such as violent content, abuse, and harassment, show how many forms harmful content takes on social media platforms today. Notably, however, the report does not say how many posts were merely labeled and how many were removed outright. Without that breakdown, it is difficult to judge how effective these measures are.

Critics have voiced deep concern over Musk’s influence on the platform. They argue that he has turned it from a community-driven space into one marked by chaos and toxicity. Musk’s own history of sharing conspiracy theories and publicly feuding with political figures has further undermined the platform’s credibility. X is also currently banned in Brazil following a contentious dispute over free speech and misinformation, which further complicates its operations.

The report describes X’s two-pronged approach to enforcement, which combines machine learning with human moderators. Automation can speed up the flagging of harmful content, but heavy reliance on algorithms raises concerns about accuracy and overreach. Musk bought the platform promising to strengthen its role as a space for free speech. Yet the scale of enforcement documented in the report suggests a tension between keeping users safe and protecting free expression.

As X continues to navigate content moderation, the need for a balanced approach is increasingly clear. Stringent measures are needed to combat harmful content. At the same time, a transparent and fair system must be a priority so that users feel both protected and free to express themselves. The challenge ahead is to foster a safe online environment without stifling the open dialogue that social media platforms are meant to support.
