The advent of artificial intelligence (AI) has brought rapid technological advances, but it has also raised critical concerns about ethical use and safety. In response, the European Union's AI Act entered into force on August 1, 2024, a significant legal step intended to govern the development and deployment of AI technologies across its member states. This comprehensive regulation aims to address the potential risks of AI, particularly in sensitive sectors such as healthcare, employment, and education. Given its complexity, a key question arises: how will it affect the day-to-day responsibilities of the programmers and software developers at the forefront of AI innovation?

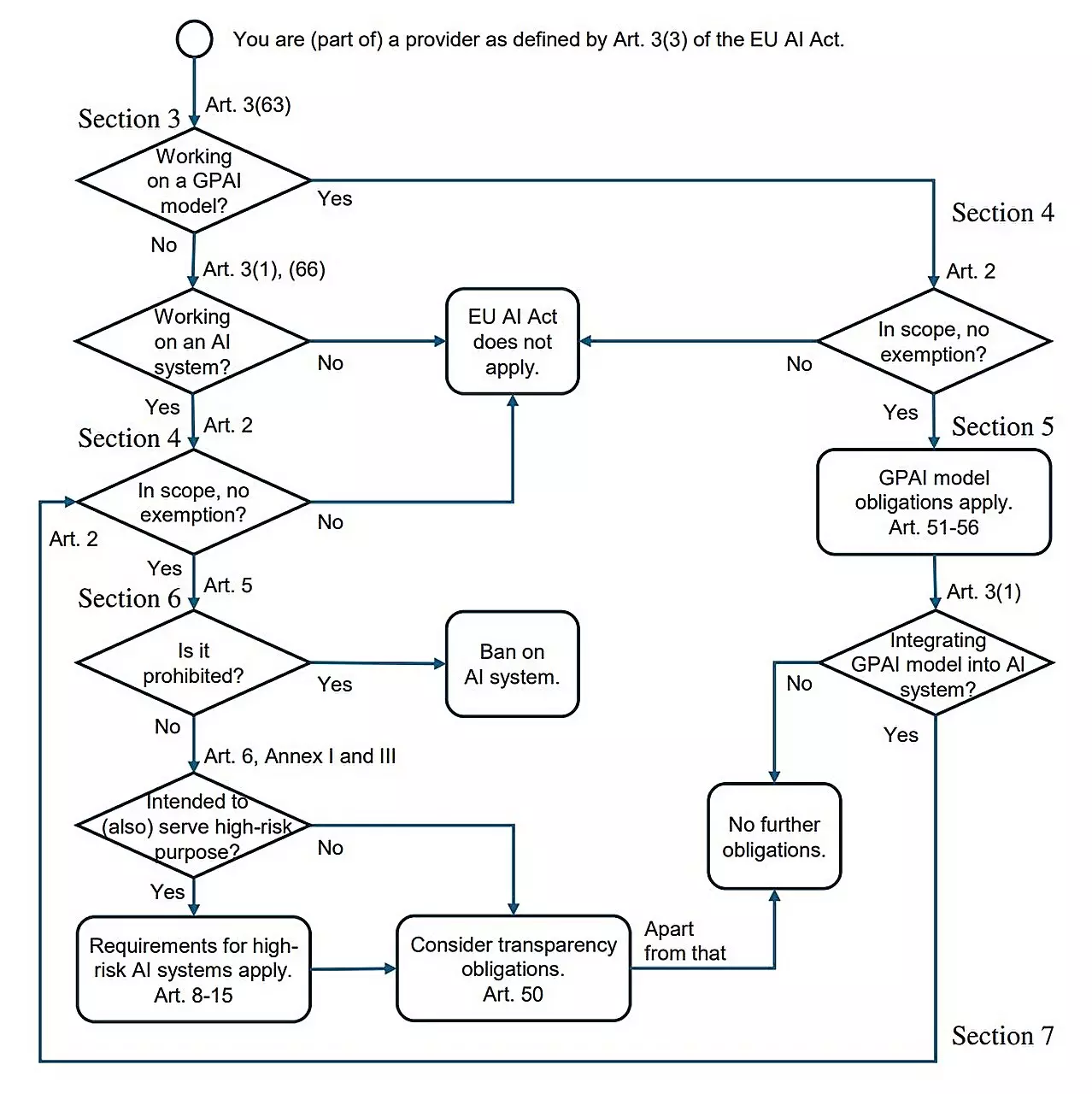

One of the most pressing concerns among programmers is the sheer length of the 144-page regulation. Many developers lack the time or legal expertise to digest such detailed legislation, which creates a need for clear and concise interpretations relevant to their work. A research initiative led by Holger Hermanns from Saarland University and Anne Lauber-Rönsberg from TU Dresden aims to bridge this gap. Their forthcoming paper, titled “AI Act for the Working Programmer,” seeks to distill the AI Act’s provisions into actionable insights for practitioners and to clarify which provisions developers must prioritize.

The AI Act categorizes AI applications by their potential risk level and reserves its most stringent requirements for “high-risk” systems. These include technologies that influence crucial life decisions, such as job applications, credit ratings, and medical diagnoses. The primary objective of these rules is to prevent discrimination and ensure fairness in how AI systems operate. For instance, developers creating software for screening job applications must meet the AI Act’s demand for unbiased training data, so that historical biases do not propagate through the algorithms they build.
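To make the training-data concern concrete for the job-screening example, here is a minimal sketch of one common diagnostic: comparing the share of positive labels per group in historical data. The AI Act does not prescribe this metric or any threshold; the function names and the record fields `group` and `hired` are hypothetical, chosen only for illustration.

```python
from collections import defaultdict


def selection_rates(records, group_key="group", label_key="hired"):
    """Return the share of positive labels per group.

    `group_key` and `label_key` are hypothetical field names, not terms
    taken from the AI Act.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for record in records:
        group = record[group_key]
        totals[group] += 1
        if record[label_key]:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def rate_ratio(rates):
    """Ratio of the lowest to the highest selection rate (1.0 means equal rates)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # No group has any positive label, so the rates are equal.
    return min(rates.values()) / highest
```

A ratio well below 1.0 would suggest that the historical labels favor one group and that the data deserves a closer look before training. It is a warning sign, not proof of compliance or of a violation.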

Conversely, developers of AI systems with less impact, such as those used in gaming or spam filtering, face comparatively few constraints. Such applications can continue to be developed without the extensive documentation and oversight mandated for their high-risk counterparts. This split within the AI Act reflects a balanced approach: promoting innovation in low-risk areas while enforcing accountability where the stakes are significantly higher.

A crucial aspect of the AI Act is the emphasis on accountability through documentation and oversight. For high-risk AI systems, developers are required to maintain meticulous logs similar to the black box recorders used in aviation. This practice not only facilitates transparency but also aids in auditing AI behaviors and decision-making processes. Such documentation ensures that users can reconstruct events and address errors effectively, thereby instilling confidence in AI applications.
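As a rough illustration of what "black box" style logging could look like in practice, the sketch below keeps an append-only record of automated decisions and chains the records with hashes so that later tampering is detectable. This is one possible design under stated assumptions, not a format required by the AI Act; the class name `DecisionLog` and all field names are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(body):
    """SHA-256 over a canonical JSON encoding of the record body."""
    encoded = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


class DecisionLog:
    """Append-only, hash-chained log of automated decisions (illustrative only)."""

    GENESIS = "0" * 64  # Placeholder hash preceding the first record.

    def __init__(self):
        self.records = []

    def append(self, model_version, inputs, output):
        # Link each record to the previous one so edits break the chain.
        prev_hash = self.records[-1]["hash"] if self.records else self.GENESIS
        body = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "model_version": model_version,
            "inputs": inputs,
            "output": output,
            "prev_hash": prev_hash,
        }
        body["hash"] = _digest(body)  # Hash is computed before the key is added.
        self.records.append(body)
        return body

    def verify(self):
        """Return True if no record has been altered or reordered."""
        prev_hash = self.GENESIS
        for record in self.records:
            body = {k: v for k, v in record.items() if k != "hash"}
            if record["prev_hash"] != prev_hash or record["hash"] != _digest(body):
                return False
            prev_hash = record["hash"]
        return True
```

An auditor could replay `records` to reconstruct what the system decided and with which model version, and call `verify()` to check that the history is intact. A production system would also need durable storage, access control, and care with personal data in `inputs`, none of which this sketch addresses.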

Developers must also produce user manuals detailing how their systems function. This requirement promotes usability and understanding among deployers, enabling them to monitor AI systems with greater awareness. Consequently, while the regulation introduces notable constraints, it also provides a framework designed to protect users and ensure ethical practices.

From the developers’ perspective, the AI Act presents both challenges and opportunities. On the one hand, understanding and integrating compliance into AI systems introduces an additional layer of complexity to software development. On the other hand, these regulations can enhance consumer trust in AI technologies, ultimately benefiting developers through wider adoption of their products. The findings from Hermanns and his team suggest that many programmers may not feel an immediate impact, especially if their work does not engage with high-risk applications.

While regulatory frameworks typically evoke apprehension, the AI Act may help standardize practices across Europe, enabling collaboration and innovation while reinforcing ethical considerations in technology development. Additionally, because the Act does not restrict research and development in either the private or the public sector, the door remains open for continued progress in AI technologies.

The EU’s AI Act is a pioneering piece of legislation that highlights the need for regulatory frameworks in an increasingly AI-driven world. By delineating high-risk systems and adopting a proportionate approach to regulation, the law seeks to forge a balanced pathway towards innovation and safe AI usage. As the industry adapts to these regulations, the continuous dialogue among lawmakers, developers, and researchers will be crucial to ensuring that AI advances responsibly and ethically. In the larger context, this legislation may position Europe as a leader in setting global standards for AI governance, fostering both trust and innovation in the multifaceted landscape of artificial intelligence.
