Artificial intelligence (AI) has revolutionized numerous sectors, from healthcare to finance, thanks to its ability to process vast amounts of data efficiently. However, AI models like ChatGPT, which rely heavily on data-driven algorithms and machine learning techniques, face significant challenges in their data-processing capabilities. A recent study, led by Professor Sun Zhong from Peking University’s School of Integrated Circuits and Institute for Artificial Intelligence, aims to address one of the critical limitations of contemporary computing architectures: the von Neumann bottleneck.

At its core, the von Neumann bottleneck is the limit on speed and efficiency that arises because traditional computer architectures keep processing and memory units separate. In AI and machine learning, where immense datasets are routinely processed, computation is delayed by the time it takes to transfer data between memory and processing units. As datasets continue to grow, overcoming the von Neumann bottleneck has become increasingly urgent.
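To make the bottleneck concrete, here is a rough back-of-the-envelope sketch in Python. It compares the time spent moving data with the time spent computing on it. The function names and all the numbers in the usage note are illustrative assumptions, not figures from the study.

```python
def transfer_time_s(num_bytes, bandwidth_bytes_per_s):
    """Time to move num_bytes between memory and the processor."""
    return num_bytes / bandwidth_bytes_per_s


def compute_time_s(num_ops, ops_per_s):
    """Time the processor needs for num_ops arithmetic operations."""
    return num_ops / ops_per_s


def is_memory_bound(num_bytes, num_ops, bandwidth_bytes_per_s, ops_per_s):
    """True when data movement, not arithmetic, dominates the runtime."""
    return (transfer_time_s(num_bytes, bandwidth_bytes_per_s)
            > compute_time_s(num_ops, ops_per_s))


# Hypothetical workload: a 1000 x 1000 weight matrix at 1 byte per weight,
# multiplied by a vector (one multiply and one add per weight).
n = 1000
weight_bytes = n * n
operations = 2 * n * n

# Placeholder hardware figures, chosen only for illustration.
print(is_memory_bound(weight_bytes, operations,
                      bandwidth_bytes_per_s=100e9, ops_per_s=10e12))
```

With these placeholder figures, fetching the weights takes far longer than the arithmetic itself, which is the situation in-memory computing tries to avoid.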

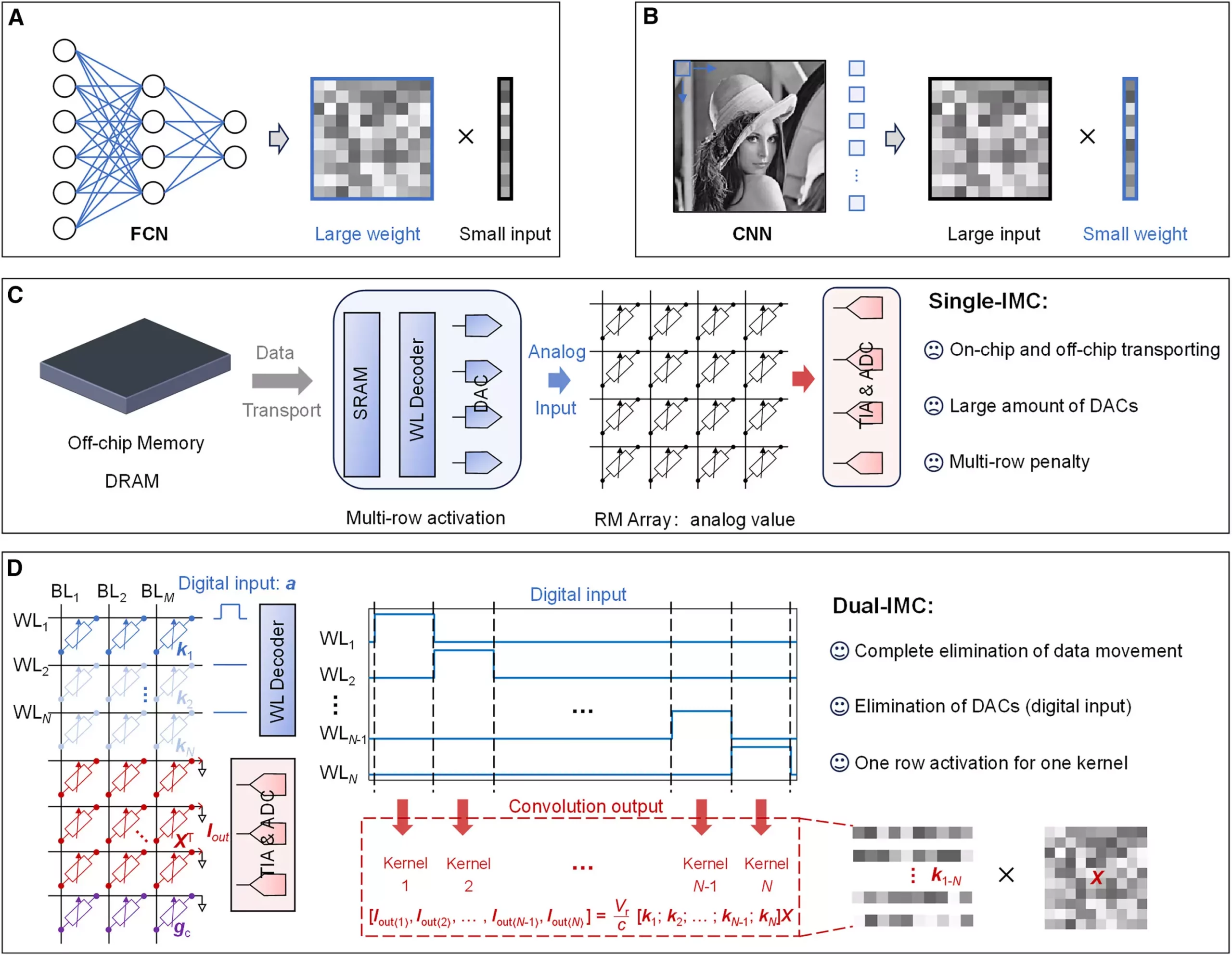

Conventional solutions to this problem have included single in-memory computing (single-IMC) schemes. In these setups, neural network weights are stored on a memory chip, while external inputs, such as images, are supplied from outside the memory. While this method marks an improvement, the input data must still be transported to the chip and converted by digital-to-analog converters (DACs) before it can be used. These components add complexity to circuit design and consume power, increasing operational costs and limiting performance.
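The single-IMC arrangement can be sketched as an idealized analog crossbar: weights sit in the array as conductances, each digital input passes through a DAC to become a voltage, and each column current is the sum of conductance times voltage. The Python below is a simplified model under those assumptions. The function names, the 4-bit DAC resolution, and the example values are hypothetical and are not taken from the study.

```python
def dac_quantize(x, bits=4, v_max=1.0):
    """Model a DAC: map an input in [0, 1] to the nearest available voltage."""
    levels = (1 << bits) - 1
    clipped = min(max(x, 0.0), 1.0)
    return round(clipped * levels) / levels * v_max


def crossbar_mvm(conductances, voltages):
    """Idealized crossbar: column current I_j = sum over rows of G[i][j] * V_i."""
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for g_row, v in zip(conductances, voltages):
        for j, g in enumerate(g_row):
            # Ohm's law per cell; Kirchhoff's current law sums each column.
            currents[j] += g * v
    return currents


def single_imc_layer(conductances, inputs, bits=4):
    """Single-IMC step: external inputs go through DACs, weights stay in memory."""
    voltages = [dac_quantize(x, bits) for x in inputs]
    return crossbar_mvm(conductances, voltages)


# Hypothetical 2 x 2 array of stored weights (arbitrary units).
stored_weights = [[1.0, 2.0],
                  [3.0, 4.0]]
print(single_imc_layer(stored_weights, [1.0, 0.0]))
```

In this model every input element needs its own conversion step before the array can do any work, which is the overhead the paragraph above attributes to DACs.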

The research team led by Professor Sun Zhong has proposed a novel solution: the dual-IMC (in-memory computing) scheme. This innovative approach addresses the limitations of single-IMC schemes by allowing storage of both the neural network’s weights and its input data within the memory array itself. By centralizing data processing in this way, the dual-IMC scheme is designed to optimize performance and energy consumption, effectively facilitating fully in-memory computation.

Tests of the dual-IMC scheme on resistive random-access memory (RRAM) devices have shown promising results, particularly in signal recovery and image processing tasks. The implications of this research extend beyond performance gains; they point toward a potential shift in how future AI models could be developed and executed.

The advantages of transitioning to a dual-IMC framework are manifold. First, fully in-memory computation improves efficiency by eliminating the delays associated with accessing off-chip dynamic random-access memory (DRAM) and on-chip static random-access memory (SRAM). This both accelerates processing and conserves energy.

Moreover, a notable reduction in production costs accompanies the adoption of dual-IMC systems. By eliminating the need for DACs, the architecture requires less physical space on chips, resulting in decreased manufacturing complexity and associated costs. This also leads to lowered power requirements and computing latency, further enhancing overall system performance.

As the demand for sophisticated data-processing capabilities continues to escalate in our increasingly digitized world, the advancements represented by the dual-IMC scheme hold immense promise. They not only pave the way for breakthroughs in AI but also suggest a fundamental rethinking of computing architecture as a whole.

The team’s findings offer significant insights that could reshape the future of artificial intelligence and machine learning. By overcoming the von Neumann bottleneck, researchers like Professor Sun Zhong are not merely addressing current limitations; they are setting the stage for a new era of computing that prioritizes efficiency, cost-effectiveness, and enhanced capabilities in data processing—all critical factors in the ongoing evolution of AI technology.
